3 Elements of Bayesian statistics

As human beings we make our decisions on what has happened to us in the past. For example, we trust a person or a company more, when we can look back at a series of successful transactions. And we have remarkable capability to recall what has just happened, but also what happened yesterday or years ago. By integrating over all the evidence, we form a view of the world we forage. When evidence is abundant, we vigorously experience a feeling of certainty, or lack of doubt. That is not to deny, that in a variety of situations, the boundedness of the human mind kicks in and we become terrible decision makers. This is for a variety of psychological reasons, to name just a few:

- plain forgetting

- the primacy effect: recent events get more weight

- confirmation bias: evidence that supports a belief is actively sought for, counter-evidence gets ignored.

- the hindsight bias: once a situation has taken a certain outcome, we believe that it had to happen that way.

The very aim of scientific research is to avoid the pitfalls of our minds and act as rational as possible by translating our theory into a formal model of reality, gathering evidence in an unbiased way and weigh the evidence by formal procedures. This weighing of evidence using data essentially is statistical modeling and statistical models in this book all produces two sorts of numbers: magnitude of effects and level of certainty. In applied research, real world decisions depend on the evidence, which has two aspects: first, the strength of effects and the level of certainty we have reached.

Bayesian inferential statistics grounds on the idea of accumulating evidence, where past data is not lost. Certainty (or strength of belief or credibility or credence) in Bayesian statistics is formalized as a probability scale (0 = impossible, 1 = certain). The level of certainty is determined by two sources, everything that we already know about the subject and the data we just gathered. Both sources are only seemingly different, because when new data is analyzed, a transition occurs from what you new before, prior belief, to what you know after seeing the data, posterior belief. In other words: by data the current belief gets an update.

Updating our beliefs is essential for acting in rational ways. The first section of this chapter is intended to tell the Big Picture. It puts statistics into the context of decision-making in design research. For those readers with a background in statistics, this section may be a sufficient introduction all by itself.

In the remainder of this chapter the essential concepts of statistics and Bayesian analysis will be introduced from ground up. First we will look at descriptive statistics and I will introduce basic statistical tools, such as summary statistics and figures. Descriptive statistics can be used effectively to explore data and prepare the statistical modeling, but they lack one important ingredient: information about the level certainty.

3.4 first derives probability from set theory and relative frequencies, before we turn to the the famous Bayes theorem 3.4.5, followed by an introduction to Bayesian thinking 3.4.6.

Then we go on to basic concepts of statistical modeling, such as the likelihood and we finish with Bayes theorem, which does the calculation of the posterior certainty from prior knowledge and data. Despite the matter, I will make minimal use of mathematical formalism. Instead, I use R code as much as possible to illustrate the concepts. If you are not yet familiar with R, you may want to read chapter 2.2 first, or alongside.

Section 3.5 goes into the practical details of modeling. A statistical model is introduced by its two components: the structural part, which typically carries the research question or theory, followed by a rather deep account of the second component of statistical models: the random part.

3.1 Rational decision making in design research

- I see clouds. Should I take my umbrella?

- Should I do this bungee jump? How many people came to death by jumping? (More or less than alpine skiing?) And how much fun is it really?

- Overhauling our company website will cost us EUR 100.000. Is it worth it?

All the above cases are examples of decision making under uncertainty. The actors aim for maximizing their outcome, be it well being, fun or money. But, they are uncertain about what will really happen. And their uncertainty occurs on two levels:

- One cannot precisely foresee the exact outcome of one’s chosen action:

- Taking the umbrella with you can have two consequences: if it rains, you have the benefit of staying dry. If it does not rain, you have the inconvenience of carrying it with you.

- You don’t know if you will be the rare unlucky one, who’s bungee rope breaks.

- You don’t know by how much the new design will attract more visitors and how much the income will raise.

- It can be difficult to precisely determine the benefits or losses of potential outcomes:

- How much worse is your day when carrying a useless object with you? How much do you hate moisture? In order to compare the two, they must be assessed on the same scale.

- How much fun (or other sources of reward, like social acknowledgments) is it to jump a 120 meter canyon? And how much worth is your own life to you?

- What is the average revenue generated per visit? What is an increase of recurrence rate of, say, 50% worth?

Once you know the probabilities of all outcomes and the respective losses, decision theory provides an intuitive framework to estimate these values. Expected utility \(U\) is the sum product of outcome probabilities \(P\) and the involved losses.

In the case of the umbrella, the decision is between two options: taking an umbrella versus taking no umbrella, when it is cloudy.

\[ \begin{aligned} &P(\text{rain}) &&&=& 0.6 \\ &P(\text{no rain}) &=& 1 - P(\text{rain}) &=& 0.4 \\ &L(\text{carry}) &&&=& 2 \\ &L(\text{wet}) &&&=& 4 \\ &U(\text{umbrella}) &=& P(\text{rain}) L(\text{carry}) + P(\text{no rain}) L(\text{carry}) = L(\text{carry}) &=& 2\\ &U(\text{no umbrella}) &=& P(\text{rain}) L(\text{wet}) &=& 2.4 \end{aligned} \]

Tables 3.1 through 3.3 develop the calculation of expected utilities U in a step-by-step manner:

attach(Rainfall)Outcomes <-

tibble(

outcome = c("rain", "no rain"),

prob = c(0.6, 0.4)

)

Outcomes | outcome | prob |

|---|---|

| rain | 0.6 |

| no rain | 0.4 |

Actions <-

tibble(action = c("umbrella", "no umbrella"))

Losses <-

expand.grid(

action = Actions$action,

outcome = Outcomes$outcome

) %>%

join(Outcomes) %>%

mutate(loss = c(2, 4, 2, 0))

Losses | action | outcome | prob | loss |

|---|---|---|---|

| umbrella | rain | 0.6 | 2 |

| no umbrella | rain | 0.6 | 4 |

| umbrella | no rain | 0.4 | 2 |

| no umbrella | no rain | 0.4 | 0 |

Utility <-

Losses %>%

mutate(conditional_loss = prob * loss) %>%

group_by(action) %>%

summarise(expected_loss = sum(conditional_loss))

Utility | action | expected_loss |

|---|---|

| umbrella | 2.0 |

| no umbrella | 2.4 |

We conclude that, given the high chance for rain, and the conditional losses, the expected loss is larger for not taking an umbrella with you. It is rational to take an umbrella when it is cloudy.

3.1.1 Measuring uncertainty

As we have seen above, a decision requires two investigations: outcomes and their probabilities, and the assigned loss. Assigning loss to decisions is highly context dependent and often requires domain-specific expertise. The issues of probabilistic processes and the uncertainty that arises from them is basically what the idea of New Statistics represents. We encounter uncertainty in two forms: first, we usually have just a limited set of observations to draw inference from, this is uncertainty of parameter estimates. From just 20 days of observation, we cannot be absolutely certain about the true chance of rain. It can be 60%, but also 62% or 56%. Second, even if we precisely knew the chance of rain, it does not mean we could make a certain statement of the future weather conditions, which is predictive uncertainty. For a perfect forecast, we had to have a complete and exact figure of the physical properties of the atmosphere, and a fully valid model to predict future states from it. For all non-trivial systems (which excludes living organisms and weather), this is impossible.

Review the rainfall example: the strategy of taking an umbrella with you has proven to be superior under the very assumption of predictive uncertainty. As long as you are interested in long-term benefit (i.e. optimizing the average loss on a long series of days), this is the best strategy. This may sound obvious, but it is not. In many cases, where we make decisions under uncertainty, the decision is not part of a homogeneous series. If you are member of a startup team, you only have this one chance to make a fortune. There is not much opportunity to average out a single failure at future occasions. In contrast, the investor, who lends you the money for your endeavor, probably has a series. You and the investor are playing to very different rules. For the investor it is rational to optimize his strategy towards a minimum average loss. The entrepreneur is best advised to keep the maximum possible loss at a minimum.

As we have seen, predictive uncertainty is already embedded in the framework of rational decision making. Some concepts in statistics can be of help here: the uncertainty regarding future events can be quantified (for example, with posterior predictive distributions 4.1.3 and the process of model selection can assist in finding the model that provides the best predictive power.

Still, in our formalization of the Rainfall case, what magically appears are the estimates for the chance of rain. Having these estimates is crucial for finding an optimal decision, but they are created outside of the framework. Furthermore, we pretended to know the chance of rain exactly, which is unrealistic. Estimating parameters from observations is the reign of statistics. From naive calculations, statistical reasoning differs by also regarding uncertainty of estimates. Generally, we aim for making statements of the following form:

“With probability \(p\), the attribute \(A\) is of magnitude \(X\).”

In the umbrella example above, the magnitude of interest is the chance of rain. It was assumed to be 60%. This appears extremely high for an average day. A more realistic assumption would be that the probability of rainfall is 60% given the observation of a cloudy sky. How could we have come to the belief that with 60% chance, it will rain when the sky is cloudy? We have several options, here:

- Supposed, you know that, on average, it rains 60% of all days, it is a matter of common sense, that the probability of rain must be equal or larger than that, when it’s cloudy.

- You could go and ask a number of experts about the association of clouds and rain.

- You could do some systematic observations yourself.

Imagine, you have recorded the coincidences of clouds and rainfall over a period, of, let’s say 20 days (Table 3.4) :

Rain| Obs | cloudy | rain |

|---|---|---|

| 2 | TRUE | TRUE |

| 6 | FALSE | FALSE |

| 7 | FALSE | FALSE |

| 10 | TRUE | TRUE |

| 11 | TRUE | TRUE |

| 14 | TRUE | TRUE |

| 15 | FALSE | TRUE |

| 20 | FALSE | FALSE |

Intuitively, you would use the average to estimate the probability of rain under every condition (Table 3.5).

Rain %>%

group_by(cloudy) | Obs | cloudy | rain |

|---|---|---|

| 1 | TRUE | FALSE |

| 2 | TRUE | TRUE |

| 3 | TRUE | FALSE |

| 4 | FALSE | FALSE |

| 5 | TRUE | TRUE |

| 6 | FALSE | FALSE |

| 7 | FALSE | FALSE |

| 8 | FALSE | FALSE |

| 9 | FALSE | TRUE |

| 10 | TRUE | TRUE |

| 11 | TRUE | TRUE |

| 12 | TRUE | TRUE |

| 13 | FALSE | FALSE |

| 14 | TRUE | TRUE |

| 15 | FALSE | TRUE |

| 16 | TRUE | FALSE |

| 17 | FALSE | TRUE |

| 18 | FALSE | FALSE |

| 19 | TRUE | FALSE |

| 20 | FALSE | FALSE |

These probabilities we can feed into the decision framework as outlined above. The problem is, that we obtained just a few observations to infer the magnitude of the parameter \(P(rain|cloudy) = 60\)%. Imagine, you would repeat the observation series on another 20 days. Due to random fluctuations, you would get a more or less different series and different estimates for the probability of rain. More generally, the true parameter is only imperfectly represented by any sample, it is not unlikely, that it is close to the estimate, but it could be somewhere else, for example, \(P(rain|cloudy) = 58.719\)%.

The trust you put in your estimation is called level of certainty or belief or confidence. It is the primary aim of statistics to rationally deal with uncertainty, which involves to measure the level of certainty associated with any statement derived from teh data. So, what would be a good way to determine certainty? Think for a moment. If you were asking an expert, how would you do that to learn about magnitude and uncertainty regarding \(P(rain|cloudy)\)?

Maybe, the conversation would be as follows:

YOU: What is the chance of rain, when it’s cloudy.

EXPERT: Wow, difficult question. I don’t have a definite answer.

YOU: Oh, c’mon. I just need a rough answer. Is it more like 50%-ish, or rather 70%-ish.

EXPERT: Hmm, maybe somewhere between 50 and 70%.

YOU: Then, I guess, taking an umbrella with me is the rational choice of action.

Note how the expert gave two endpoints for the parameter in question, to indicate the location and the level of uncertainty. If she had been more certain, she had said "between 55 and 65%. While this is better than nothing, it remains unclear, which level of uncertainty is enclosed. Is the expert 100%, 90% or just 50% sure the true chance is in the interval? Next time, you could ask as follows:

…

EXPERT: Hmm, maybe somewhere between 70-90%

YOU: What do you bet? I’m betting 5 EUR that the true parameter is outside the range you just gave.

EXPERT: I dare you! 95 EUR it’s inside!

The expert feels 95% certain, that the parameter in question is in the interval. However, for many questions of interest, we have no expert at hand (or we may not even trust them altogether). Then we proceed with option 3: making our own observations.

3.1.2 Benchmarking designs

The most basic decision in practical design research is whether a design fulfills an external criterion. External criteria for human performace in a human-machine system are most common, albeit not abundant, in safety-critical domains.

Consider Jane: she is user experience researcher at the mega-large rent-a-car company smartr.car. Jane was responsible for a overhaul of the customer interface for mobile users. Goal of the redesign was to streamline the user interface, which had grown wild over the years. Early customer studies indicated that the app needed a serious visual de-cluttering and stronger funneling of tasks. 300 person months went into the re-development and the team did well: a recent A/B study had shown that users learned the smartr.car v2.0 fast and could use its functionality very efficiently. Jane’s team is prepared for the roll-out, when Marketing comes up with the following request:

Marketing: We want to support market introduction with the following slogan: “rent a car in 99 seconds.”

Jane: Not all users manage a full transaction in that short time. That could be a lie.

Marketing: Legally, the claim is fine if it holds on average.

Jane: That I can find out for you.

Jane takes another look at the performance of users in the smartr car v2.0 condition. As she understands it, she has to find out whether the average of all recorded time-on-tasks with smartr.car 2.0 is 99 seconds, or better. Here is how the data looks like:

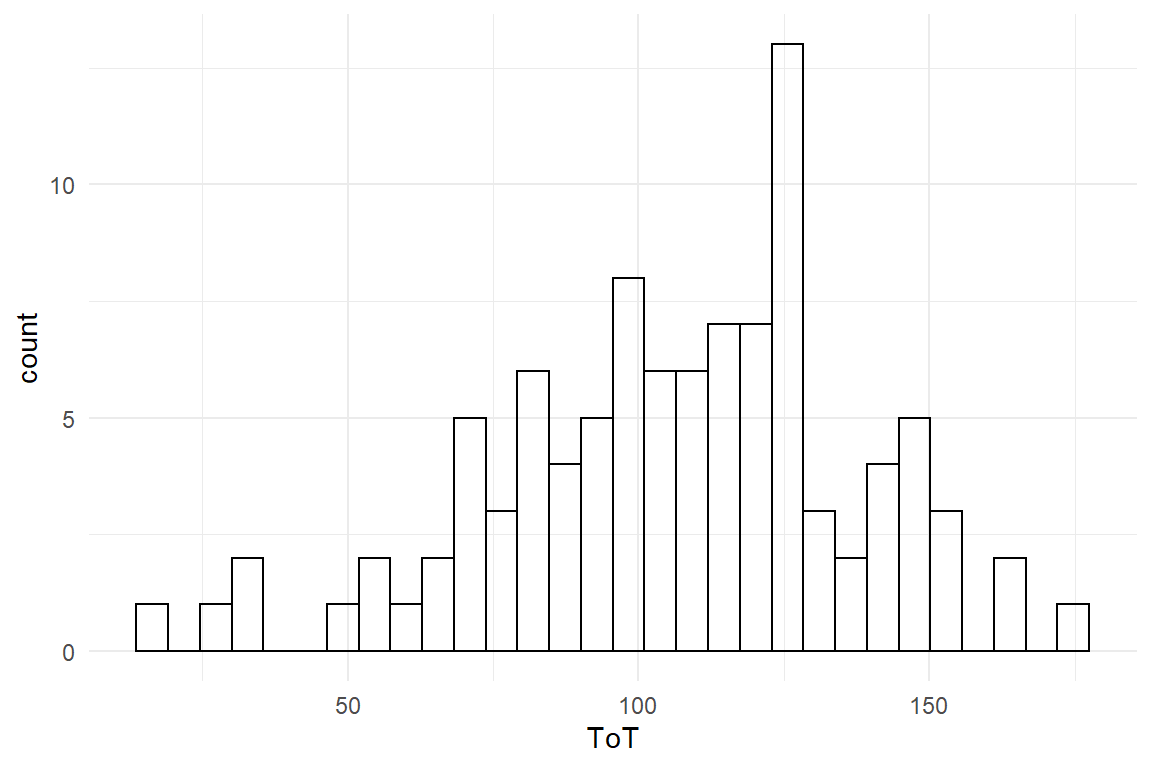

attach(Sec99)Ver20 %>%

ggplot(aes(x = ToT)) +

geom_histogram()

Figure 3.1: Distribution of ToT

The performance is not completely off the 99 seconds, many users are even faster. Jane figures out that she has to ask a more precise question, first, as teh slogan can mean different things, like:

- all users can do it within 99 seconds

- at least one user can do it

- half of the users can do it

Jane decides to go the middle way and chooses the population average, hence the average ToT must not be more than 99 seconds. Unfortunately, she had only tested a small minority of users and therefore cannot be certain about the true average:

mean(Ver20$ToT)## [1] 106Because the sample average is an uncertain, Jane is afraid, Marketing could use this as an argument to ignore the data and go with the claim. Jane sees no better way as quantifying the chance of being wrong using a statistical model, which will learn to know as the Grand Mean Model (4.1). The following code estimates the model and shows the estimated coefficient (Table 3.6).

M_1 <-

Ver20 %>%

stan_glm(ToT ~ 1, data = .)

P_1 <-

posterior(M_1)coef(P_1)| model | parameter | type | fixef | center | lower | upper |

|---|---|---|---|---|---|---|

| M_1 | Intercept | fixef | Intercept | 106 | 99.8 | 112 |

Let’s see what the GMM reports about the population average and its uncertainty: The table above is called a CLU table, because it reports three estimates per coefficient:

- Center, which (approximately) is the most likely position of the true value

- Lower, which is the lower 95% credibility limit. There is a 2.5% chance that the true value is lower

- Upper, the 95% upper limit. The true value is larger than this with a chance of 2.5%.

This tell Jane that most likely the average time-on-task is \(Intercept\). That is not very promising, and it is worse: 99 is even below the lower 95% credibility limit. So, Jane can send a strong message: The probability that this claim is justified, is smaller than 2.5%.

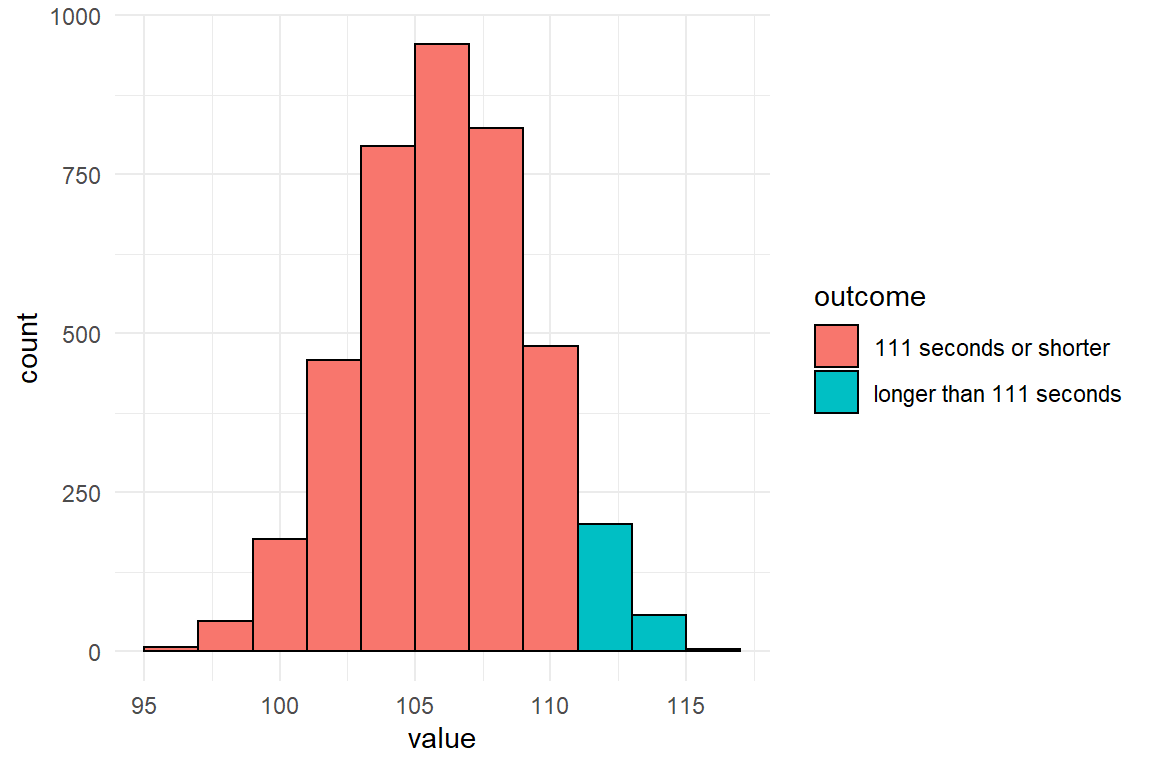

Luckily, Jane had the idea that the slogan could be changed to “rent a card in 1-1-1 seconds”. The 95% credibility limits are in her favor, since 111 is at the upper end of the credibility limit. It would be allowed to say that the probability to err is not much smaller than 2.5%. But Jane desires to make an accurate statement. But what precisely is the chance that the true population average is 111 or lower? In Bayesian analysis there is a solution to that. When estimating such a model, we get the complete distribution of certainty, called the posterior distribution. In fact, a CLU table with 95% credibility limits is just a summary on the posterior distribution. This distribution is not given as a function, but has been generated by a (finite) random walk algorithm, known as Markov-Chain Monte Carlo. At every step (or most, to be precise), this algorithm jumps to another set of coordinates in parameter space and a frequency distribution arises that can be used to approximate levels of certainty. Figure 3.2 shows the result of the MCMC estimation as a frequency distribution (based on 4000 iterations in total), divided into the two possible outcomes.

P_1 %>%

filter(parameter == "Intercept") %>%

mutate(outcome = ifelse(value <= 111,

"111 seconds or shorter",

"longer than 111 seconds"

)) %>%

ggplot(aes(

x = value,

fill = outcome

)) +

geom_histogram(binwidth = 2)

Figure 3.2: A histogram of MCMC results split by the 111-seconds criterion

In a similar manner to how the graph above was produced, a precise certainty level can be estimated from the MCMC frequency distribution contained in the posterior object. Table 3.7 shows that the 111 seconds holds with much better certainty.

P_1 %>%

filter(parameter == "Intercept") %>%

summarise(certainty = mean(value <= 111)) | certainty |

|---|

| 0.935 |

The story of Jane is about decision making under risk and under uncertainty. We have seen how easily precise statements on uncertainty can be derived from a statistical model. But regarding rational decision making, this is not an ideal story: What is missing is a systematic analysis of losses (and wins). The benefit of going with the slogan has never been quantified. How many new customers it will really attract and how much they will spend cannot really be known upfront. Let alone, predicting the chance to loose in court and what this costs are almost unintelligeable. The question must be allowed, what good is the formula for utility, when it is practically impossible to determine the losses. And if we cannot estimate utilities, what are the certainties good for?

Sometimes, one possible outcome is just so bad, that the only thing that practically matters, is to avoid it at any costs. Loosing a legal battle often falls into this category and the strategy of Marketing/Jane effectively reduced this risk: they dismissed a risky action, the 99 seconds statement, and replaced it with a slogan that they can prove is true with good certainty.

In general, we can be sure that there is at least some implicit calculation of utilities going on in the minds of Marketing. Perhaps, that is a truly intuitive process, which is felt as an emotional struggle between the fear of telling the untruth and love for the slogan. This utility analysis probably is inaccurate, but that does not mean it is completely misleading. A rough guess always beats complete ignorance, especially when you know about the attached uncertainty. Decision-makers tend to be pre-judiced, but even then probabilities can help find out to what extent this is the case: Just tell the probabilities and see who listens.

3.1.3 Comparison of designs

The design of systems can be conceived as a choice between design options. For example, when designing an informational website, you have the choice of making the navigation structure flat or deep. Your choice will change usability of the website, hence your customer satisfaction, rate of recurrence, revenue etc. Much practical design research aims at making good choices and from a statistical perspective that means to compare the outcomes of two (or more) design option. A typical situation is to compare a redesign with its predecessor, which will now be illustrated by a hypothetical case:

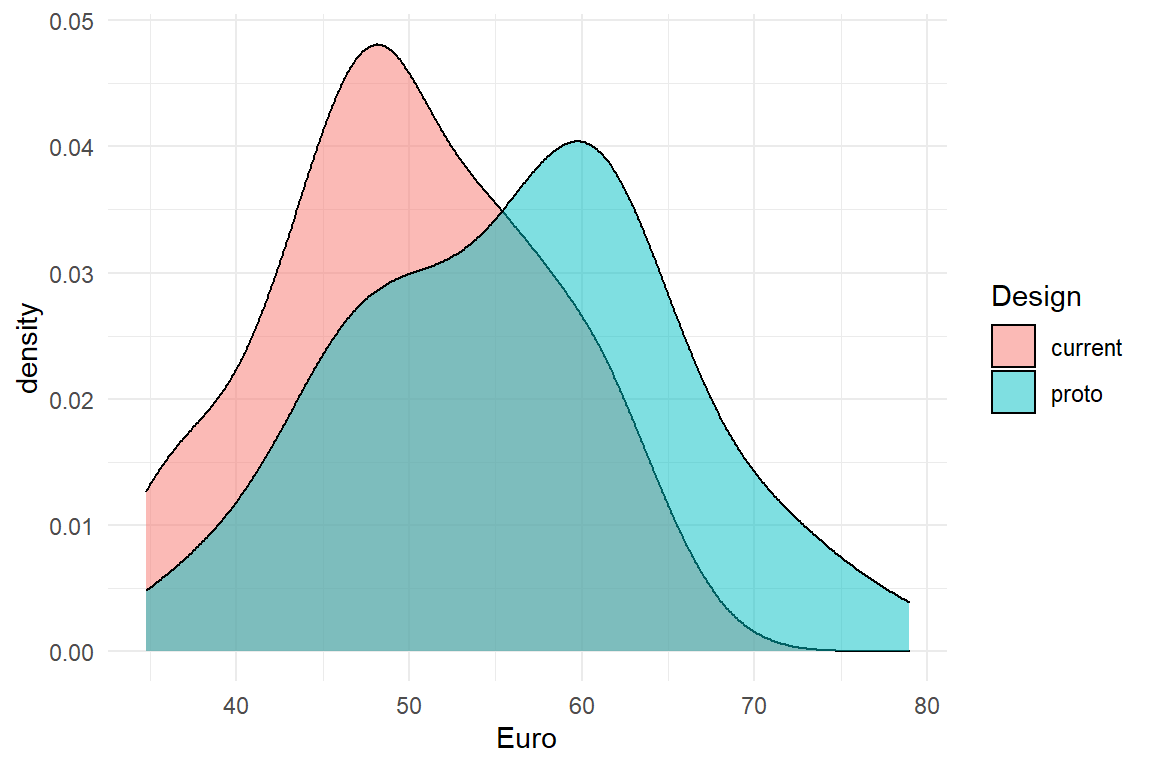

Violet is a manager of an e-commerce website and at present, a major overhaul of the website is under debate. The management team agrees that this overhaul is about time and will most likely increase the revenue per existing customer. Still, there are considerable development costs involved and the question arises whether the update will pay itself in a reasonable time frame. To answer this question, it is not enough to know that revenues increase, but an more accurate prediction of how much precisely is gained. In order to return the investment, the increase must be in the ballpark of 10% increase in revenue. For this purpose, Violet carries out a user study to compare the two designs. Essentially, she observes the transactions of 50 random customers using the current system with 50 transactions with a prototype of the new design. The measured variable is the money every user spends during the visit. The research question is: By how much do revenues increase in the prototype condition?. Figure 3.3 shows the distribution of measured revenue in the experiment.

attach(Rational)RD %>%

ggplot(aes(x = Euro, fill = Design)) +

geom_density(alpha = .5)

Figure 3.3: Density plot comparing the revenue of two designs

There seems to be a slight benefit for the prototype condition. But, is it a 10% increase? The following calculation shows Violet that it could be the case (Table 3.8)

RD %>%

group_by(Design) %>%

summarize(mean_revenue = mean(Euro)) | Design | mean_revenue |

|---|---|

| current | 49.9 |

| proto | 56.3 |

Like in the previous case, testing only a sample of 100 users out of the whole population leaves room for uncertainty. So, how certain can Violet be? A statistical model can give a more complete answer, covering the magnitude of the improvement, as well as a level of certainty. Violet estimates a model that compares the means of the two conditions 4.3.1, assuming that the randomness in follows a Gamma distribution 7.3.1.

library(rstanarm)

library(tidyverse)

library(bayr)

M_1 <-

RD %>%

stan_glm(Euro ~ Design,

family = Gamma(link = "log"),

data = .

)

P_1 <- posterior(M_1)The coefficients of the Gamma model are on a logarithmic scale, but when exponentiated, they can directly be interpreted as multiplications 7.2.1.1. That precisely matches the research question, which is stated as percentage increase, rather than a difference (Table 3.9).

coef(P_1, mean.func = exp)| parameter | fixef | center | lower | upper |

|---|---|---|---|---|

| Intercept | Intercept | 49.90 | 46.99 | 53.05 |

| Designproto | Designproto | 1.13 | 1.04 | 1.23 |

The results tell Violet that, most likely, the average user spends 49.904 Euro with the current design. The prototype seems to increase the revenue per transaction by a factor of \(1.127\). That would be a sufficient increase, however this estimate comes from a small sample of users and there remains a considerable risk, that the true improvement factor is much weaker (or stronger). The above coefficient table tells that with a certainty of 95% the true value lies between \(1.039\) and \(1.225\). But, what precisely is the risk of the true value being lower than 1.1? This information can be extracted from the model (or the posterior distribution):

N_risk_of_failure <-

P_1 %>%

filter(parameter == "Designproto") %>%

summarize(risk_of_failure = mean(exp(value) < 1.1))

paste0("risk of failure is ", c(N_risk_of_failure))## [1] "risk of failure is 0.28425"So, risk of failure is just below 30%. With this information in mind Violet now has several options:

- deciding that 28.425% is a risk she dares to take and recommend going forward with the development

- continue testing more users to reach a higher level of certainty

- improve the model by taking into account sources of evidence from the past, i.e. prior knowledge

3.1.4 Prior knowledge

It is rarely the case that we encounter a situation as a blank slate. Whether we are correct or not, when we look at the sky in the morning, we have some expectations on how likely there will be rain. We also take into account the season and the region and even the very planet is sometimes taken into account: the Pathfinder probe carried a bag of high-tech gadgets to planet Mars. However, the included umbrella was for safe landing only, not to cover from precipitation, as Mars is a dry planet.

In most behavioral research it still is the standard that every experiment had to be judged on the produced data alone. For the sake of objectivity, researchers were not allowed to take into account previous results, let alone their personal opinion. In Bayesian statistics, you have the choice. You can make use of external knowledge, but you don’t have to.

Violet, the rational design researcher has been designing and testing e-commerce systems for many years and has supervised several large scale roll-outs. So the current project is not a new situation, at all. From the top of her head, Violet produces the following table to capture her past twenty projects and the increase in revenue that had been recorded afterwards.

attach(Rational)D_prior| Obs | project | revenue_increase |

|---|---|---|

| 2 | 2 | 1.016 |

| 6 | 6 | 1.014 |

| 8 | 8 | 1.003 |

| 9 | 9 | 1.072 |

| 13 | 13 | 0.998 |

| 14 | 14 | 0.979 |

| 17 | 17 | 1.037 |

| 19 | 19 | 1.041 |

On this data set, Violet estimates another grand mean model that essentially captures prior knowledge about revenue increases after redesign:

M_prior <-

D_prior %>%

stan_glm(revenue_increase ~ 1,

family = Gamma(link = "log"),

data = .,

iter = 5000

)

P_prior <- posterior(M_prior)Note that the above model is a so called Generalized Linear Model with a Gamma shape of randomness, which will be explained more deeply in chapter 7.3.

Table 3.11 shows the results. The mean increase was Intercept and without any further information, this is the best guess for revenue increase in any future projects (of Violet). A statistical model that is based on such a small number of observations usually produces very uncertain estimates, which is why the 95% credibility limits are wide. There even remains a considerable risk that a project results in a decrease of revenue, although that has never been recorded. (In Gamma models coefficients are usually multiplicative, so a coefficient \(<1\) is a decline).

coef(P_prior, mean.func = exp)| model | parameter | type | fixef | center | lower | upper |

|---|---|---|---|---|---|---|

| M_prior | Intercept | fixef | Intercept | 1.02 | 0.882 | 1.18 |

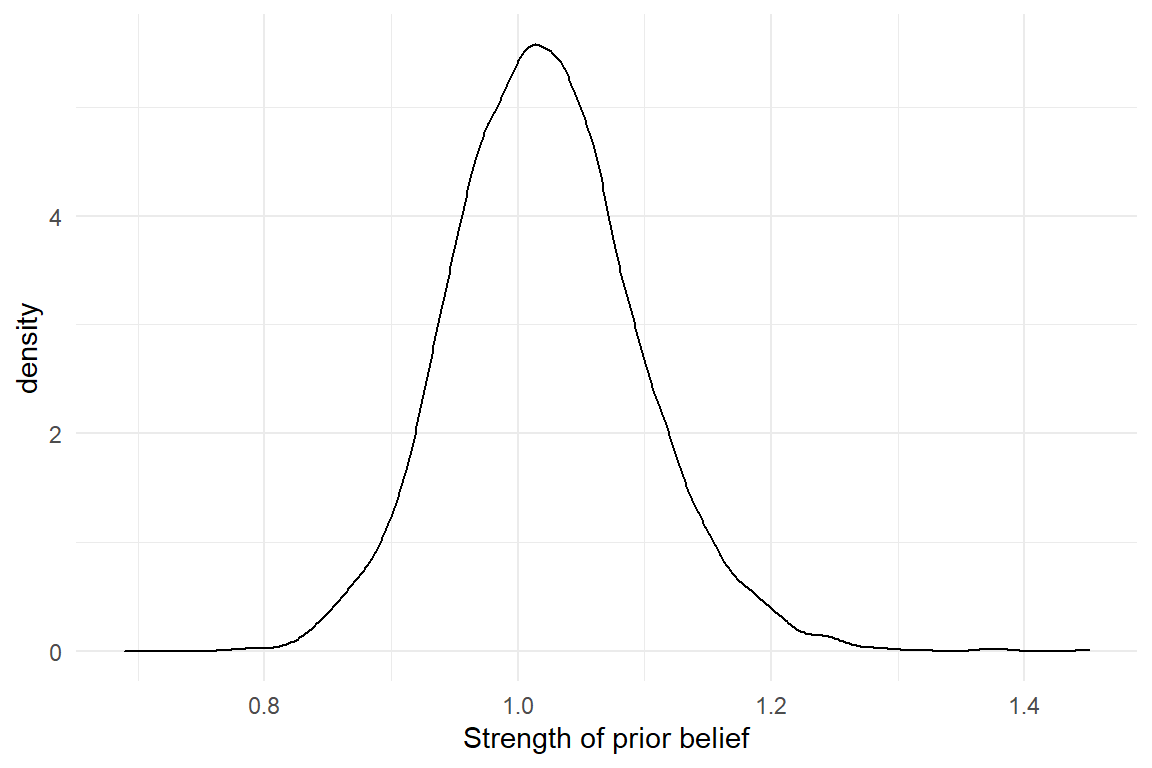

Or graphically, we can depict the belief as in Figure 3.4:

P_prior %>%

filter(parameter == "Intercept") %>%

mutate(value = exp(value)) %>%

ggplot(aes(x = value)) +

geom_density() +

xlab("Strength of prior belief")

Figure 3.4: Prior knowledge about a parameter is expressed as a distribution

The population average (of projects) is less favorable than what Violet saw in her present experiment. If the estimated revenue on the experimental data is correct, it would be a rather extreme outcome. And that is a potential problem, because extreme outcomes are rare. Possibly, the present results are overly optimistic (which can happen by chance) and do not represent the true change revenue, i.e. on the whole population of users. In Bayesian Statistics, mixing present results with prior knowledge is a standard procedure to correct this problem. In the following step, she uses the (posterior) certainty from M_prior and employs it as prior information (by means of a Gaussian distribution). Model M_2 has the same formula as M_1 before, but combines the information of both sources, data and prior certainty.

T_prior <-

P_prior %>%

filter(parameter == "Intercept") %>%

summarize(mean = mean(value), sd = sd(value))

M_2 <-

stan_glm(

formula = Euro ~ Design,

prior_intercept = normal(0, 100),

prior = normal(T_prior[[1, 1]], T_prior[[1, 2]]),

family = Gamma(link = "log"),

data = RD

)

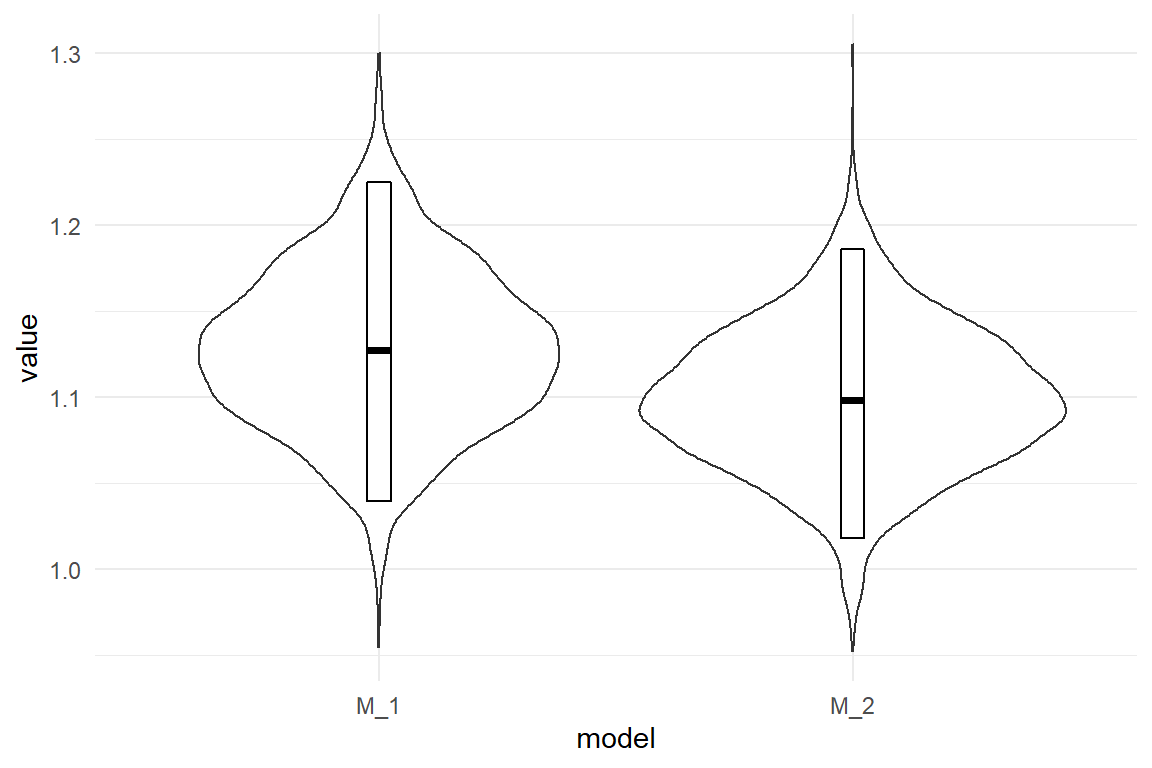

P_2 <- posterior(M_2)Note that the standard deviation here is saying how the strength of belief is distributed for the average revenue. It is not the standard deviation in the a population of projects. Table 3.12 and Figure 3.5 show a comparison of the models with and without prior information.

| model | parameter | center | lower | upper |

|---|---|---|---|---|

| M_1 | Designproto | 1.13 | 1.04 | 1.23 |

| M_2 | Designproto | 1.10 | 1.02 | 1.19 |

P_comb %>%

ggplot(aes(x = model, y = value)) +

geom_violin() +

geom_crossbar(

data = coef(P_comb),

aes(y = center, ymin = lower, ymax = upper),

width = .05

)

Figure 3.5: Comparison of expected revenue increase with and without prior information

Model M_2 reduces the estimated expected revenue by a small amount. But, remember that Violet has to meet the criterion of 110% in revenue. In the following she extracts the risk of failure (revenue smaller than 110%) from the posterior distribution (Table 3.13) .

P_comb %>%

mutate(outcome = value < 1.1) %>%

group_by(model) %>%

summarize(risk_to_fail = mean(outcome)) | model | risk_to_fail |

|---|---|

| M_1 | 0.284 |

| M_2 | 0.512 |

So what should Violet report to decision makers? Something like this: “The data from the study us that our chance of success is around two-thirds. However, in my long career I have never actually reached such an increase in revenue, so I’d rather say, chance of success is more around 50%.”

3.2 Observations and measures

The statistical models presented in this book have in common, that there is exactly one measure, which we call the outcome. In design research, outcome variables directly represent the current value of a design, or an aspect of it. This section provides an overview on the types of outcome variables.

3.2.1 Interaction sequences

A general concept in design is to think of purposes as user tasks. A user task can be defined as an initial state, a desired outcome and a procedure to get there. The knowledge required to follow the procedure can be found in two major locations: the users mind (mental model) and the interface (design model). A principle of user-centered design is to create a design model that matches the users current representation of the task, or easily enters the users mind, at least. The first is called intuitiveness of a design, the latter ease-of-learning.

A highly intuitive design matches the users mental model, and can be used out-of-the-box, without the requirement to instruct or train a user. Intuitiveness relies on the match between design and mental model. Whereas the design model is explicit, the mental model needs to be elicited from the users mind. Eliciting procedural mental models is difficult. You may think it is sufficient to just ask users about the idea of a procedure, but that is a limited approach. If I ask you about the precise steps in preparing a cup of coffee in your kitchen, that probably is a more demanding and error-prone than to ask you to prepare an actual cup of coffee. Two reasons make up for this discrepancy:

- The human mind has separate facilities for procedural knowledge and declarative knowledge. If I ask you about a procedure, you first have to make a translation.

- The more automated a task is, the less available it is to your conscious mind.

Our ability to verbalize our actions are limited. If there were no such verbalization limit, designing for tasks would be simple: just ask! Only mind-reading is more convenient. In user-centered design verbal inquiries are a central method. But, results from user interviews can be incomplete or biased.

An example for incompleteness is that users sometimes fail to report what they think is obvious. For example, in modern computer desktop applications a function to undo a step is standard and probably it is one of the most frequently used functions. In other types of systems, for example data-base driven information systems, implementing an Undo function is non-trivial, because data base records can be changed by multiple users. It can be implemented by so-called roll-back mechanisms, but these reduce performance and storage capacity of the system. When collecting user requirements for such a system, the developer has little interest in Undo, whereas the user has a huge interest, but does not mention it. If that is not resolved during the design process, there will be trouble.

Verbal reports of action sequences are often incomplete. But, if there is something even worse, then it is the limited ability of humans to imagine new situations, or novel ways of doing something. Imagine you had a time machine and you would travel back to the year 1981, which is a year before the series Knight Rider went on broadcast. If you would ask car drivers from that era, how they would envision a navigation assistant device, you would probably end up with something that looks more like a Star Trek computer console than with anything comparable to modern navigation device.

This why one central principle of user-centered design is the direct observation of users. By directly observing how a person prepares a cup of coffee, we can learn about the details of behavior and close the verbalization gap. By observing many action sequences, we can carve out detailed user requirements, or fix an existing design. Observing user interactions does not require one-way mirrors and nights of video coding. Because of the verbalization gap, a lot can be learned just by watching over the users shoulder. The method of think-aloud usability testing even goes one step further and combines behavior observation with verbalization.

Interaction sequences can sometimes be collected without a human observer. Log files of web servers provide sequences of users navigating web site. Plugin software is available that records keystrokes and mouse actions on computers. The difficult part is the following: When observing 50 users while doing a non-trivial task, no two interaction sequences are exactly the same (if i had to bet on it). By itself, there is little value without further means of interpretation and this can go two ways up: qualitative and quantitative.

The qualitative design researcher will collect interaction sequences, as well as verbal reports and shuffle them around until an intelligible picture emerges. One way to describe the purpose of user testing is to find out all possible ways how things can go wrong. Every break-down that is observed at least once in a sample of test users, tells a story of what may happen to hundreds or thousands of users in the future. That is why in early development phases, qualitative research rules.

The quantitative researcher will aim at deriving measures from the interaction sequence. Formally, to measure means assigning numbers to observed sequences, so that these can be brought into an order, at least. If the original data is qualitative, we need some method of transformation that gives us measures.

Sometimes, you have to go a long way up the qualitative route, before you can derive useful measures. In (Schnittker et al. 2016) we coded sequences from nurses using an infusion pump. Individual sequences were compared to a optimal reference path. The closer a user stays on this path, the better. But how to measure similarity? We used the Levensthein distance, which takes two sequences, the optimal patzh and the observed path and determines the minimum number of edits to transform one sequence into the other. This results in a score for error proneness, the deviation from optimal path.

Another example is a study we did on the active user paradox. We recorded interaction sequences of users doing editing tasks with a graphics software. The sessions were recorded and analysed using a behavioural coding scheme. First, events were classified on a low level (e.g. “reading the handbook,” “first time trying a function”) and later aggregated to broader classes (e.g. “exploratory behavior”). The number of exploratory events then served as a measure for the exploratory tendencies of the participant.

3.2.2 Performance measures

User errors are qualitative by nature and need a transformation to be used as outcome variables. Other aspects of performance can be measured directly. A useful framework for outcome measures is the classic concept of usability, which the ISO 9142-11 defines by the following three high-level criteria:

- effectiveness: can users accomplish their tasks?

- efficiency: what resources have to be spend for a task, e.g. time.

- satisfaction: how did the user like it?

While these criteria originated in the field of Human-Computer Interaction, they can easily be adapted to compare everything that people do their work with. Even within the field, it has been adapted to hundreds of studies and a hundred ways are reported of assessing these criteria.

Effectiveness is often measured by completion rate (CR). A classic waterfall approach would be to consult the user requirements documents and identify the, let’s say eight, crucial tasks the system must support. User test might then show that most users fail at the two tasks, and a completion rate of 75% is recorded for the system. Completion rate is only a valid effectiveness measure with distinct tasks. Strictly, the set of tasks also had to be complete, covering the whole system. When completion rate is taken from in series of repetitive tasks, it depends on whether it is effectiveness or efficiency. It is effectiveness, when a failed operation is unrepairable, such as a traffic accident, data loss on a computer or medical errors. But, who cares for a single number between 0 and 1, when the user test provides such a wealth of information on why users failed? Effectiveness, in the sense of task completion, is primarily a qualitative issue and we shall rarely encounter it in this book.

A more subtle notion of effectiveness is the quality of outcome, and despite the very term, it is a measure. (Perhaps, it should better be called level of perfection.) Reconsider the AUP study, where participants had to modify a given graphic, e.g. change some colors and erase parts of it. Of course, some participants worked neatly, whereas others used broader strokes (literally). There are several ways to rank all results by quality and thereby create an outcome variable.

Efficiency is where it really gets quantitative as we ask about resources: time, attention, strain, distraction and Euros. Efficiency can be measured in a variety of ways: time-on-task (ToT), clicks, mental workload or time spent watching the traffic while driving.

Counting the number of clicks before someone has found the desired piece of information is a coarse, but easy to acquire and intuitive measure of efficiency, and so is time-on-task (ToT).

ToT and other performance measures can be very noisy. The general recipe to reduce noise and improve certainty is to collect more data. The obvious way of doing this is by increasing the sample size, but that can be costly. A more efficient way to reduce uncertainty with a given sample is to collect more data per participant, which is called repeated measures. Chapter 6 will introduce models that deal with repeated measures in a straight-forward way.

Counting steps-to-completion or errors often requires the laborous process of interaction analysis. Measures of duration are much easier to obtain and in many situations they are spot-on: In usability studies, time-on-task is a direct measure of efficiency. When controlling a car in dense city traffic, a few hundred milliseconds is what makes huge a difference and therefore reaction time is a valid performance measure. In experimental cognitive research reaction times have been succesfully used in countless experiments to reveal the structure of the mind.

3.2.3 Satisfaction and other feelings

The standard definition of usability defines three levels of usability, the two performance-related effectiveness and efficiency, and satisfaction. The latter has long been held the enfant terrible of the three. That’s not a big surprise, as satisfaction is about the feelings of users.

Early research in the 1990s took enough with a few rating scales, that measure satisfaction, as the absence of negative feelings. Later, when User Experience era took off, a wave of constructs and measures washed over the research community, here are just a few examples:

- beauty

- hedonic quality

- coolness

- credibility

- meaning of life

Feelings are a tricky business when it comes to measuring them. Most frequently, rating scales are employed, and often the same author makes the remark, that the study should be replicated with more objective measures for emotional states, such as physiological measures or implicit measures. However, I haven’t seen many studies where such measures produced good results.

Despite all criticism, self-report rating scales are still the primary method of measuring feelings towards designs. Rating scale instruments exist for a variety of concepts, but they come in only a few forms: first, there exist single item or multi-item scales. Single-item instruments are the hydrogen of rating scales. Because they are so short, they cause little interruption and are therefore useful for obtaining repeated measures over time. That is very valuable, when the goal is to track feelings of an extended period of time. For example, the effect of learning can be seen in a decline of cognitive workload over time. From a methodological point-of-view, single-item scales are inferior, because many quality checks rest on having multiple items (see 6.8).

Multi-item rating scales are the standard. Participants or users respond to a number of statements, that have an overarching theme. In contrast to single items, multi-item scales are amenable to a number of procedures for quality check, such as reliability. Whereas a psychometric assessment requires the data on item level, for the purpose of design eveluation multi-item ratings are often aggregated total scores, such as the average score. However, aggregation always causes a loss in information and with the multi-level models presented in 6.8, this is also no longer required.

Next to the number of items, rating scale instruments differ in cardinality of the response, which is the number of possible responses to an item. By far most rating scales use between four and nine ordered bins. In contrast, so called visual analog rating scales measure on a continuum. This can be done on paper, using a ruler to translate the response into numbers, or using a slider bar in a computer program.

Finally, rating scales have either unipolar or bipolar anchoring. Many rating scales put labels (instead of just numbers) on minimum and the maximum bins. With a unipolar item, the left anchor is neutral, whereas the right is not, like in:

0: Dull … 1: Bliss

Dull is not the opposite of bliss, it is the absence of it. (Just like Zero is not the opposite of One.) one example of a bipolar scales is:

-1: Ugly … + 1: Beautiful

Another consideration with binned (rather than continuous) bipolar scales. If such a scale has an uneven number, the center bin is neutral.

-1:Ugly … 0:Neutral … +1:Beautifuly

Sometimes, it is useful that participants have the opportunity for a neutral answer, for example, when the participant may just not know:

The help pages of the program are written in a clear language.

-1: Not at all … 0: I didn’t notice +1: … Totally

Especially with long questionnaires, it can happen that participants are getting bored an just always respond neutral. In such a case, an even number of bins, i.e. the absence of a neutral response, forces participants to make a choice of direction, at least.

What kind of rating scale you use has consequences for your statistical model. As we will see in section 6.8, multi-level models are a good match for multi-item rating scales. What also matters for the choice of model is whether you have been using a visual analog scale or a binned scale. Data from visual analog scales is more easily treated, by either a Gaussian 6.8 or a Beta linearized model 7.4.2. Both are relatively lean methods and easy to report. For binned rating scales the complicated beast called ordinal logistic regression 7.4.1 applies. My advice would be to use visual analog scales, whenever possible, even if this means to not exactly following the original instructions for the scale.

While rating scales are prevalent for measuring feelings, that does not mean there are no other, more objective, ways. There has been some buzz about physiological measures, like galvanic skin response or EEG, lately. In our own lab, I have seen these measures fail more often than succeed for the evaluation of products (such as wine or floor cleaning robots).

Implicit measures are means to assess certain attitudes or feelings by means of experimental setups. For example, We once tried to measure technophile attitude (geekism) using a variant of the classic Stroop task. In (Schmettow, Noordzij, and Mundt 2013) we showed pictures of computers to a samnple of students, followed by the Stroop task, which means that participants had to name the ink color of a color-printed word (e.g., “explore” in color Red). It was conceived that reaction time increases when a participant experiences a strong association, like how good it felt to build a computer all by yourself. The initial success was soon washed away by a failure to reproduce these results. Another implicit method sometimes proposed is the Approach-Avoidance task, which has gotten some merits in research on addiction and racism. In simple terms, participants (or users) are asked to push or pull a joystick and it seems that they pull faster, when they see something they like (a bottle of booze) and push faster when they dislike what they see (a photograph of war victims). However, I have seen this approach failing to produce relevant results in a design comparison experiment. Generally, such experiments produce reaction time differences below the 100ms mark and therefore many trials are needed to carve out any differences. At the same time, I have doubts that the emotional reactions towards, say computer interfaces, play in the same league as the stimuli used in research on addiction or racism research. Emotional responses in design research may just be too mellow to disturb cognitive processes with a strength that is measurable.

3.3 Descriptive statistics

In empirical research we systematically gather observations. Observations of the same kind are usually subsumed as variables. A set of variables that have been gathered on the same sample are called a data set, which typically is a table with variables in columns. In the most general meaning, a statistic is a single number that somehow represents relevant features of a data set, such as:

- frequency: how many measures of a certain kind can be found in the data set?

- mean: do measures tend to be located left (weak) or right (strong) on a scale?

- variance: are measures close together or widely distributed along the scale?

- association: does one variable X tend to change when another variable Y changes?

3.3.1 Frequencies

attach(Sec99)The most basic statistics of all probably is the number of observations on a variable \(x\), usually denoted by \(n_{x}\). The number of observations is a rough indicator for the amount of data that has been gathered. In turn, more data usually results in better accuracy of statistics and higher levels of certainty can be reached.

nrow(Ver20$ToT)## NULLThe number of observations is not as trivial as it may appear at first. In particular, it is usually not the same as the sample size, for two reasons: First, most studies employ repeated measures to some extent. You may have invited \(N_\textrm{Part} = 20\) participants to your lab, but each participant is tested on, let’s say, \(N_\textrm{Task} = 5\) tasks, the number of observations is \(N_\textrm{Obs} = N_\textrm{Part}N_\textrm{Task} = 100\). Second, taking a valid measure can always fail for a variety of reasons, resulting in missing values (NA). For example, in the 99 seconds study, it has happened, that a few participants missed to fill in their age on the intake form. The researcher is left with fewer measures of age \(n_{age}\) than there were participants.

N_obs <- function(x) sum(!is.na(x))

N_obs(Ver20$age)## [1] 100Another important issue is the distribution of observations across groups (Table 3.14) . Again, the number of observations in a group is linked to the certainty we can gain on statistics of that group. Furthermore, it is sometimes important to have the distribution match the proportions in the population, as otherwise biases may occur.

Ver20 %>%

group_by(Gender) %>%

summarize(n()) | Gender | n() |

|---|---|

| female | 59 |

| male | 41 |

The table above shows so called absolute frequencies. Often, we have to compare frequencies of two groups of different size, it often is more appropriate to report relative frequencies or proportions:

n_Gender <- N_obs(Ver20$Gender)

Ver20 %>%

group_by(Gender) %>%

summarize(rel_freq = n() / n_Gender) | Gender | rel_freq |

|---|---|

| female | 0.59 |

| male | 0.41 |

Summarizing frequencies of metric measures, such as time-on-task (ToT) or number of errors is useful, too. However, a complication arises by the fact that continuous measures do not naturally fall into groups. Especially in duration measures no two measures are exactly the same.

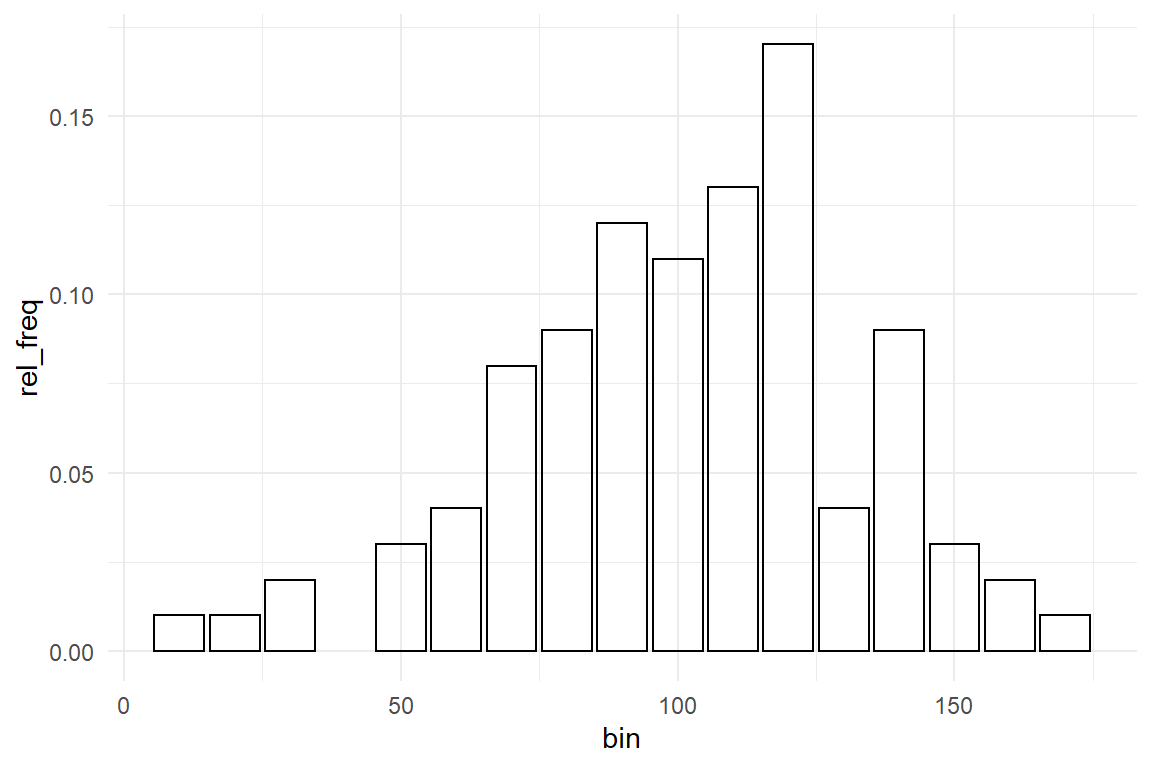

length(unique(Ver20$Gender))## [1] 2length(unique(Ver20$ToT))## [1] 100The answer to this problem is binning: the scale of measurement is divided into a number of adjacent sections, called bins, and all measures that fall into one bin are counted. For example, we could use bins of 10 seconds and assess whether the bin with values larger than 90 and smaller or equal to 100 is representative in that it contains a large proportion of values. If we put such a binned summary of frequencies into a graph, that is called a histogram.

bin <- function(x, bin_width = 10) floor(x / bin_width) * bin_width

n_ToT <- N_obs(Ver20$ToT)

Ver20 %>%

mutate(bin = bin(ToT)) %>%

group_by(bin) %>%

summarize(rel_freq = n() / n_ToT) %>%

ggplot(aes(x = bin, y = rel_freq)) +

geom_col()

Figure 3.6: Histogram showing relative frequencies

Strictly spoken, grouped and binned frequencies are not one statistic, but a vector of statistics. It approximates what we will later get to know more closely as a distribution 3.5.2.

3.3.2 Central tendency

Reconsider the rational design researcher Jane 3.1.2. When asked about whether users can complete a transaction within 99, she looked at the population average of her measures. The population average is what we call the (arithmetic) mean. The mean is computed by summing over all measures and divide by the number of observations. The mean is probably the most often used measure of central tendency, but two more are being used and have their own advantages: median and mode.

my_mean <- function(x) sum(x) / N_obs(x) ## not length()

my_mean(Ver20$ToT)## [1] 106Note that I am using function N_obs() from Section 3.3.1, not length(), to not accidentally count missing values (NA).

Imagine a competitor of the car rental company goes to court to fight the 99-seconds claim. Not an expert in juridical matters, my suggestion is that one of the first questions to be regarded in court probably is: what does “rent a car in 99 seconds” actually promise? One way would be the mean (“on average users can rent a car in 99 seconds”), but here are some other ways to interpret the same slogan:

“50% (or more) of users can ….” This is called the median. The median is computed by ordering all measures and identify the the element right in the center. If the number of observations is even, there is no one center value, and the mean of the center pair is used, instead.

my_median <- function(x) {

n <- length(x)

center <- (n + 1) %/% 2

if (n %% 2 == 1) {

sort(x, partial = center)[center]

} else {

mean(sort(x, partial = center + 0:1)[center + 0:1])

}

}

my_median(Ver20$ToT)Actually, the median is a special case of so called quantiles. Generally, an quantiles are based on the order of measures and an X% quantile is that value where X% of measures are equal to or smaller. The court could decide that 50% of users is too lenient as a criterion and could demand that 75% percent of users must complete the task within 99 seconds for the slogan to be considered valid.

quantile(Ver20$ToT, c(.50, .75))## 50% 75%

## 108 125A common pattern to be found in distributions of measures is that a majority of observations are clumped in the center region. The point of highest density of a distribution is called the mode. In other words: the mode is the region (or point) that is most likely to occur. For continuous measures this once again poses the problem that every value is unique. Sophisticated procedures exist to smooth over this inconvenience, but by the simple method of binning we can construct an approximation of the mode: just choose the center of the bin with highest frequency. This is just a crude approximation. Advanced algorithms for estimating modes can be found in the R package Modeest.

mode <- function(x, bin_width = 10) {

bins <- bin(x, bin_width)

bins[which.max(tabulate(match(x, bins)))] + bin_width / 2

}mode(Ver20$ToT)## [1] 145Ver20 %>%

group_by() %>%

summarize(

mean_ToT = mean(ToT),

median_ToT = median(ToT),

mode_ToT = mode(ToT)

) | mean_ToT | median_ToT | mode_ToT |

|---|---|---|

| 106 | 108 | 145 |

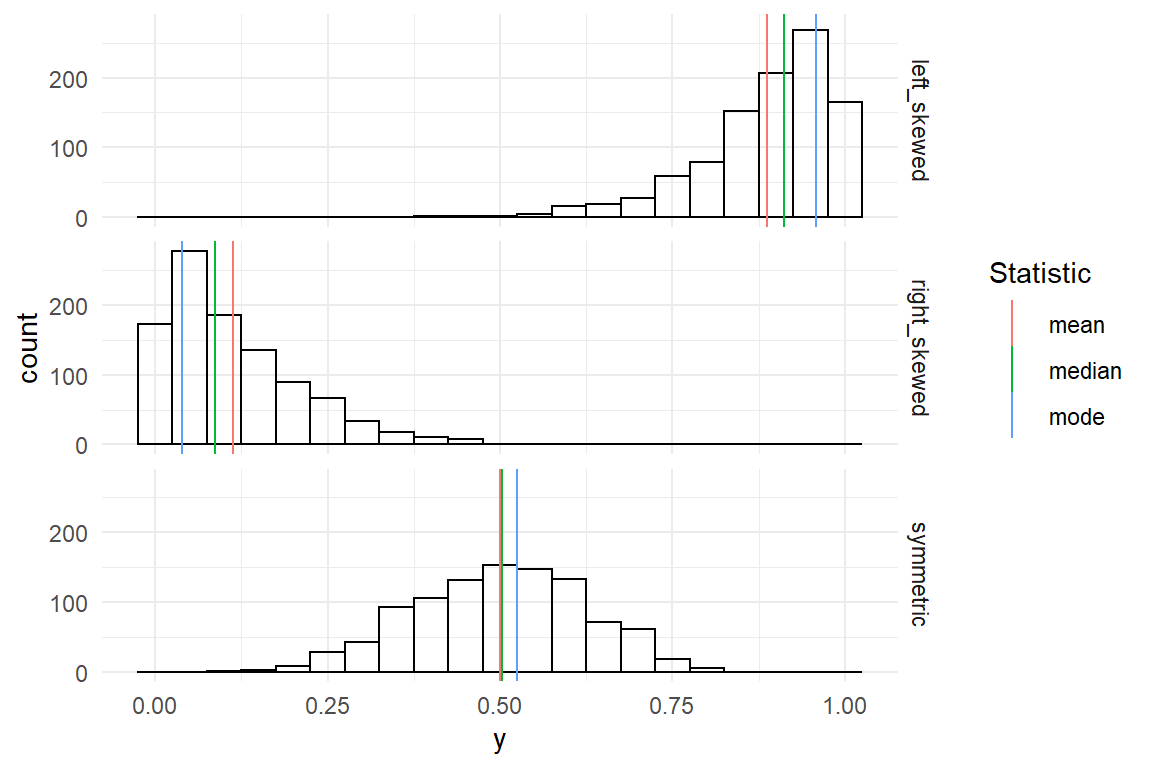

The table above shows the three statistics for central tendency side-by-side. Mean and median are close together. This is frequently the case, but not always. Only if a distribution of measures is completely symmetric, mean and median perfectly coincide. In section 3.5.2 we will encounter distributions that are not symmetric. The more a distribution is skewed, the stronger the difference between mean and median increases (Figure 3.7).

Figure 3.7: Left-skewed, right-skewed and symmetric distributions

To be more precise: for left skewed distributions the mean is strongly influenced by few, but extreme, values in the left tail of the distribution. The median only counts the number of observations to both sides and is not influenced by how extreme these values are. Therefore, it is located more to the right. The mode does not regard any values other than those in the densest region and just marks that peak. The same principles hold in reversed order for right-skewed distributions.

To summarize, the mean is the most frequently used measure of central tendency, one reason being that it is a so called sufficient statistic, meaning that it exploits the full information present in the data. The median is frequently used when extreme measures are a concern. The mode is the point in the distribution that is most typical.

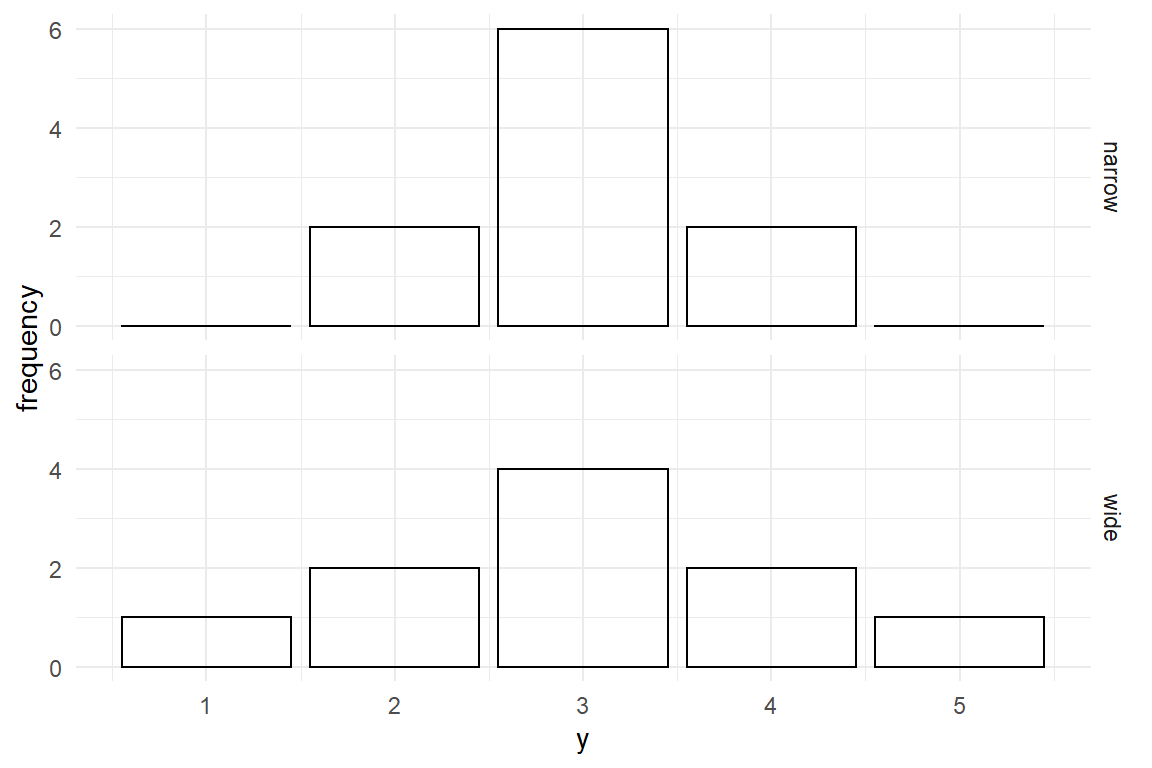

3.3.3 Dispersion

In a symmetric distribution with exactly one peak, mean and mode coincide and the mean represents the most typical value. But, a value being more typical does not mean it is very typical. That depends on how the measures are dispersed over the whole range. In the figure below, the center value of the narrow distribution contains 60% of all measures, as compared to 40% in the wide distribution, and is therefore more representative (Figure 3.8) .

D_disp <-

tribble(

~y, ~narrow, ~wide,

1, 0, 1,

2, 2, 2,

3, 6, 4,

4, 2, 2,

5, 0, 1

) %>%

gather(Distribution, frequency, -y)

D_disp %>%

ggplot(aes(

x = y,

y = frequency

)) +

facet_grid(Distribution ~ .) +

geom_col()

Figure 3.8: Narrow and wide distributions

A very basic way to describe dispersion of a distribution is to report the range between the two extreme values, minimum and maximum. These are easily computed by sorting all values and selecting the first and the last element. Coincidentally, they are also special cases of quantiles, namely the 0% and 100% quantiles.

A boxplot is a commonly used geometry to examine the shape of dispersion. Similar to histograms, boxplots use a binning mechanism and are useful for continuous measures. Whereas histograms use equidistant bins on the scale of measurement, boxplots create four bins based on 25% quantile steps (Figure 3.9). . These are also called quartiles.

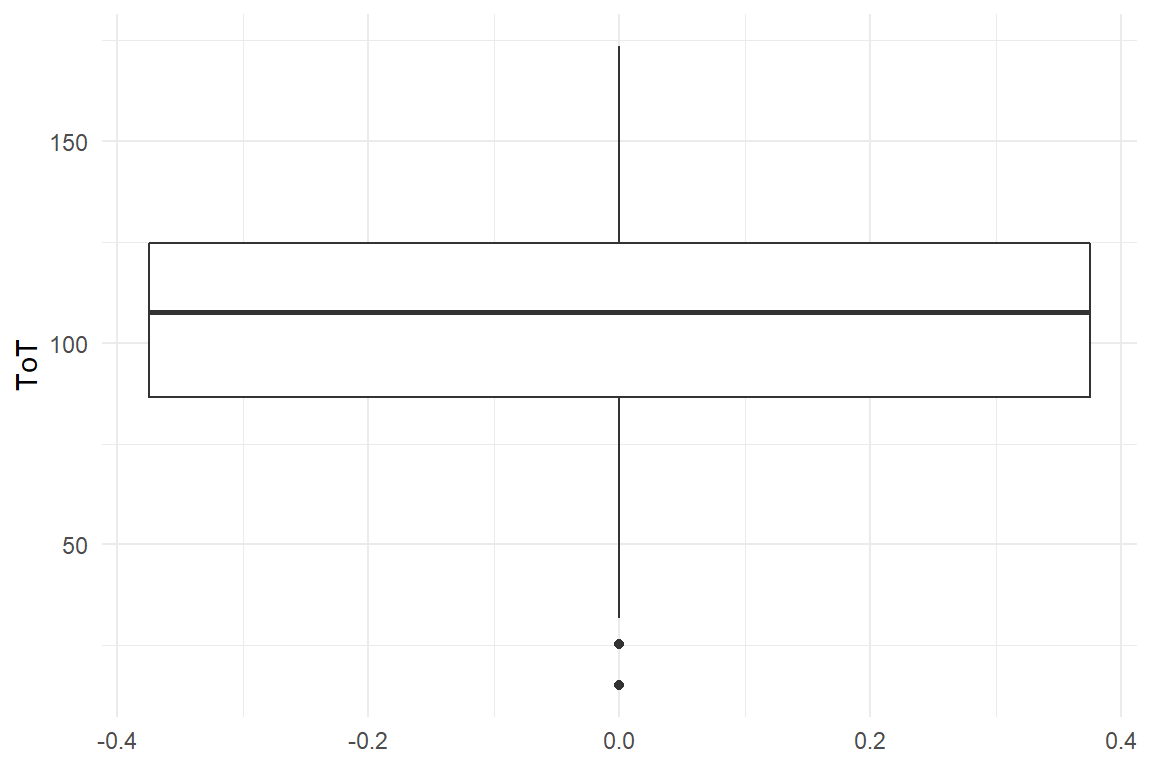

Ver20 %>%

ggplot(aes(y = ToT)) +

geom_boxplot()

Figure 3.9: A boxplot shows quartiles of a distribution

The min/max statistics only uses just these two values and therefore does not fully represent the amount of dispersion. A statistic for dispersion that exploits the full data is the variance, which is the mean of squared deviations from the mean. Squaring the deviations makes variance difficult to interpret, as it no longer is on the same scale as the measures. The standard deviation solves this problem by taking the square root of variance. By reversing the square the standard deviation is on the same scale as the original measures and can easily be compared to the mean (Table 3.17) .

min <- function(x) sort(x)[1]

max <- function(x) quantile(x, 1)

range <- function(x) max(x) - min(x)

var <- function(x) mean((mean(x) - x)^2)

sd <- function(x) sqrt(var(x))

Ver20 %>%

summarize(

min(ToT),

max(ToT),

range(ToT),

var(ToT),

sd(ToT)

) | min(ToT) | max(ToT) | range(ToT) | var(ToT) | sd(ToT) |

|---|---|---|---|---|

| 15.2 | 174 | 158 | 966 | 31.1 |

3.3.4 Associations

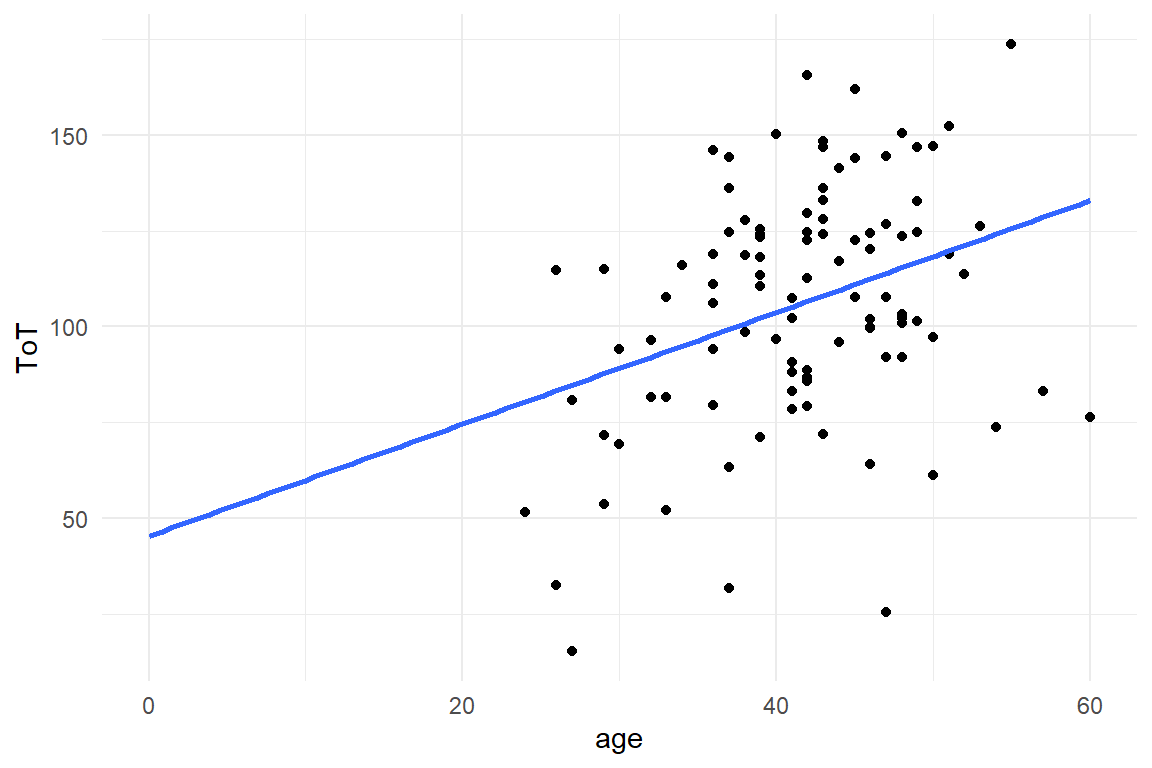

- Are elderly users slower at navigating websites?

- How does reading speed depend on font size?

- Is the result of an intelligence test independent from gender?

In the previous section we have seen how all individual variables can be described by location and dispersion. A majority of research deals with associations between measures and the present section introduces some statistics to describe them. Variables represent properties of the objects of research and fall into two categories: Metric variables represent a measured property, such as speed, height, money or perceived satisfaction. Categorical variables put observations (or objects of research) into non-overlapping groups, such as experimental conditions, persons who can program or cannot, type of education etc. Consequently, associations between any two variables fall into precisely one of three cases, as shown in Table 3.18.

tribble(

~between, ~categorical, ~metric,

"categorical", "frequency cross tables", "differences in mean",

"metric", "", "correlations"

) | between | categorical | metric |

|---|---|---|

| categorical | frequency cross tables | differences in mean |

| metric | correlations |

3.3.4.1 Categorical associations

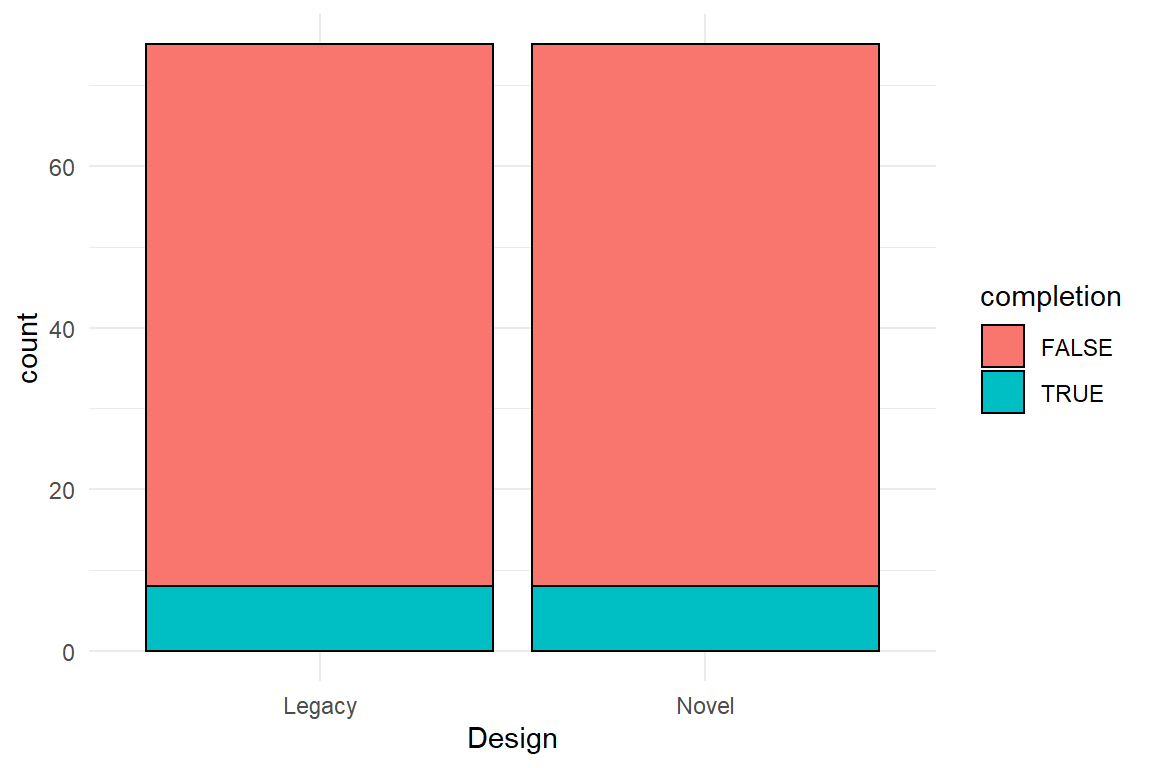

Categorical variables group observations, and when they are both categorical, the result is just another categorical case and the only way to compare them is relative frequencies. To illustrate the categorical-categorical case, consider a study to assess the safety of two syringe infusion pump designs, called Legacy and Novel. All participants of the study are asked to perform a typical sequence of operation on both devices (categorical variable Design) and it is recorded whether the sequence was completed correctly or not (categorical variable Correctness, Table 3.19. ).

attach(IPump)

D_agg %>%

filter(Session == 3) %>%

group_by(Design, completion) %>%

summarize(frequency = n()) %>%

ungroup() %>%

spread(completion, frequency) | Design | FALSE | TRUE |

|---|---|---|

| Legacy | 21 | 4 |

| Novel | 22 | 3 |

Besides the troubling result that incorrect completion is the rule, not the exception, there is almost no difference between the two designs. Note that in this study, both professional groups were even in number. If that is not the case, absolute frequencies are difficult to compare and we better report relative frequencies. Note how every row sums up to \(1\) in the Table 3.20 :

D_agg %>%

filter(Session == 3) %>%

group_by(Design, completion) %>%

summarize(frequency = n()) %>%

group_by(Design) %>%

mutate(frequency = frequency / sum(frequency)) %>%

ungroup() %>%

spread(completion, frequency) | Design | FALSE | TRUE |

|---|---|---|

| Legacy | 0.84 | 0.16 |

| Novel | 0.88 | 0.12 |

In addition, absolute or relative frequencies can be shown in a stacked bar plot (Figure 3.10).

D_agg %>%

ggplot(aes(x = Design, fill = completion)) +

geom_bar()

Figure 3.10: A stacked bar plot with absolute frequencies

3.3.4.2 Categorical-metric associations

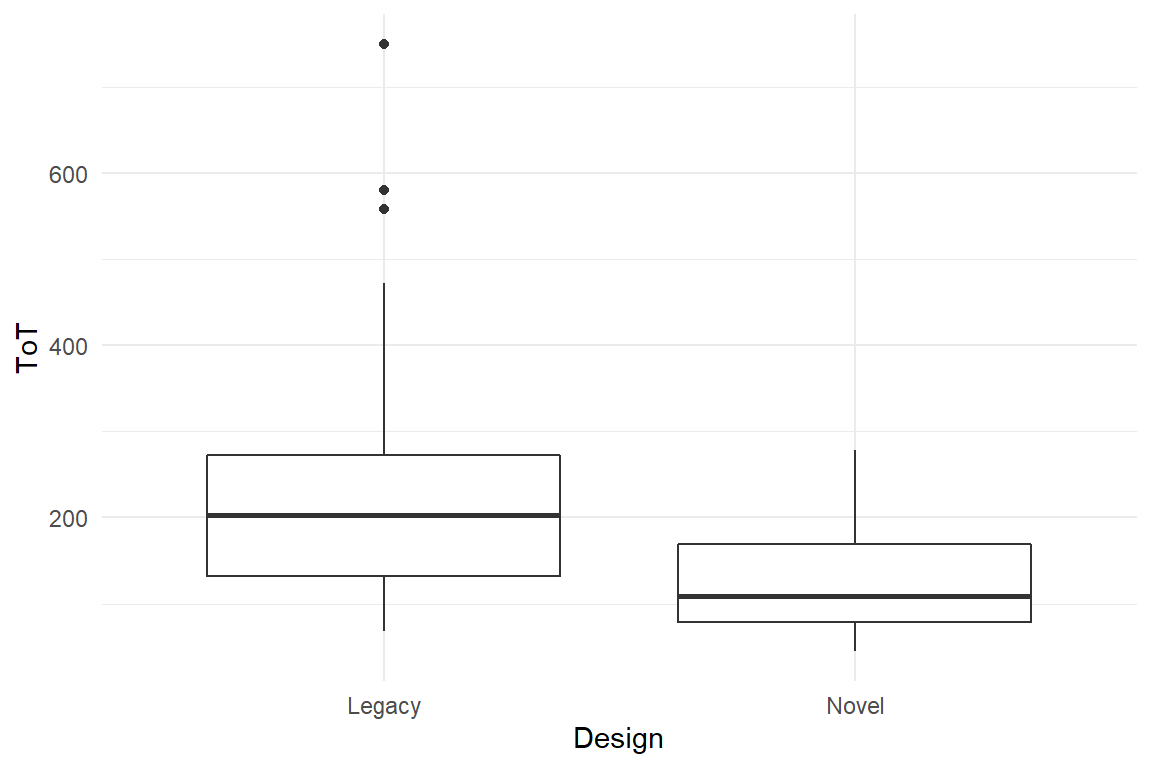

Associations between categorical and metric variables are reported by grouped location statistics. In the case of the two infusion pump designs, the time spent to complete the sequence is compared in comparison-of-means Table 3.21.

D_agg %>%

filter(Session == 3) %>%

group_by(Design) %>%

summarize(

mean_ToT = mean(ToT),

sd_ToT = sd(ToT)

) | Design | mean_ToT | sd_ToT |

|---|---|---|

| Legacy | 151.0 | 62.2 |

| Novel | 87.7 | 33.8 |

For the illustration of categorical-metric associations case, boxplots haven proven useful. Boxplots show differences in central tendency (median) and dispersion (other quartiles) simultaneously. In Figure 3.11, we observe that Novel produces shorter ToT and also seems to be less dispersed.

D_agg %>%

ggplot(aes(x = Design, y = ToT)) +

geom_boxplot()

Figure 3.11: Boxplot comparison of two groups

3.3.4.3 Covariance and correlation

For associations between a pair of metric variables, covariance and correlations are commonly employed statistics.

A covariance is a real number that is zero when there really is no association between two variables. When two variables move into the same direction, covariance gets the positive. When they move in opposite directions, covariance is negative

For an illustration, consider the following hypothetical example of study on the relationship between mental ability and performance in a minimally-invasive surgery (MIS) task. MIS tasks are known to involve a lot of visual-spatial cognition, which means that performance on other visual-spatial tasks should be associated.

The following code simulates such a set of measures from a multivariate-normal distribution. The associations are defined as a matrix of correlations and are then up-scaled by the standard error to result in covariances. Later, we will do the reverse to obtain correlations from covariances.

cor2cov <- function(cor, sd) diag(sd) %*% cor %*% t(diag(sd))

cor_mat <- matrix(c(

1, .95, -.5, .2,

.95, 1, -.5, .2,

-.5, -.5, 1, .15,

.2, .2, .15, 1

), ncol = 4)

sd_vec <- c(.2, .2, 40, 2)

mean_vec <- c(2, 2, 180, 6)

D_tests <-

mvtnorm::rmvnorm(300,

mean = mean_vec,

sigma = cor2cov(cor_mat, sd_vec)

) %>%

as_tibble() %>%

rename(MRS_1 = V1, MRS_2 = V2, ToT = V3, Corsi = V4) %>%

as_tbl_obs()D_tests| Obs | MRS_1 | MRS_2 | ToT | Corsi |

|---|---|---|---|---|

| 17 | 1.97 | 1.93 | 165 | 5.75 |

| 25 | 2.10 | 2.08 | 144 | 6.93 |

| 44 | 2.20 | 2.13 | 125 | 3.31 |

| 58 | 1.70 | 1.68 | 204 | 4.10 |

| 68 | 2.22 | 2.25 | 161 | 5.54 |

| 77 | 2.20 | 2.23 | 108 | 4.62 |

| 225 | 1.91 | 1.75 | 146 | 3.48 |

| 272 | 2.04 | 2.07 | 132 | 8.50 |

The following function computes the covariance of two variables. The covariance between the two MRS scores is positive, indicating that they move into the same direction.

my_cov <- function(x, y) {

mean((x - mean(x)) * (y - mean(y)))

}

my_cov(D_tests$MRS_1, D_tests$MRS_2)## [1] 0.0407The problem with covariances is that, like variances, they are on a square scale (a product of two numbers), which makes them difficult to interpret. This is why later, we will transform covariances into correlations, but for understanding the steps to go there, we have to understand the link between variance and covariance. The formula for covariance is (with \(E(X)\) the mean of \(X\)):

\[ \textrm{cov}_{XY} = \frac{1}{n} \sum_{i=1}^n (x_i - E(X)) (y_i - E(Y)) \]

Covariance essentially arises by the multiplication of differences to the mean, \((x_i - E(X)) (y_i - E(Y)\). When for one observation both factors go into the same direction, be it positive or negative, this term gets positive. If the association is strong, this will happen a lot, and the whole sum gets largely positive. When the deviations systematically move in opposite direction, such that one factor is always positive and the other negative, we get a large negative covariance. When the picture is mixed, i.e. no clear tendency, covariance will stay close to zero.

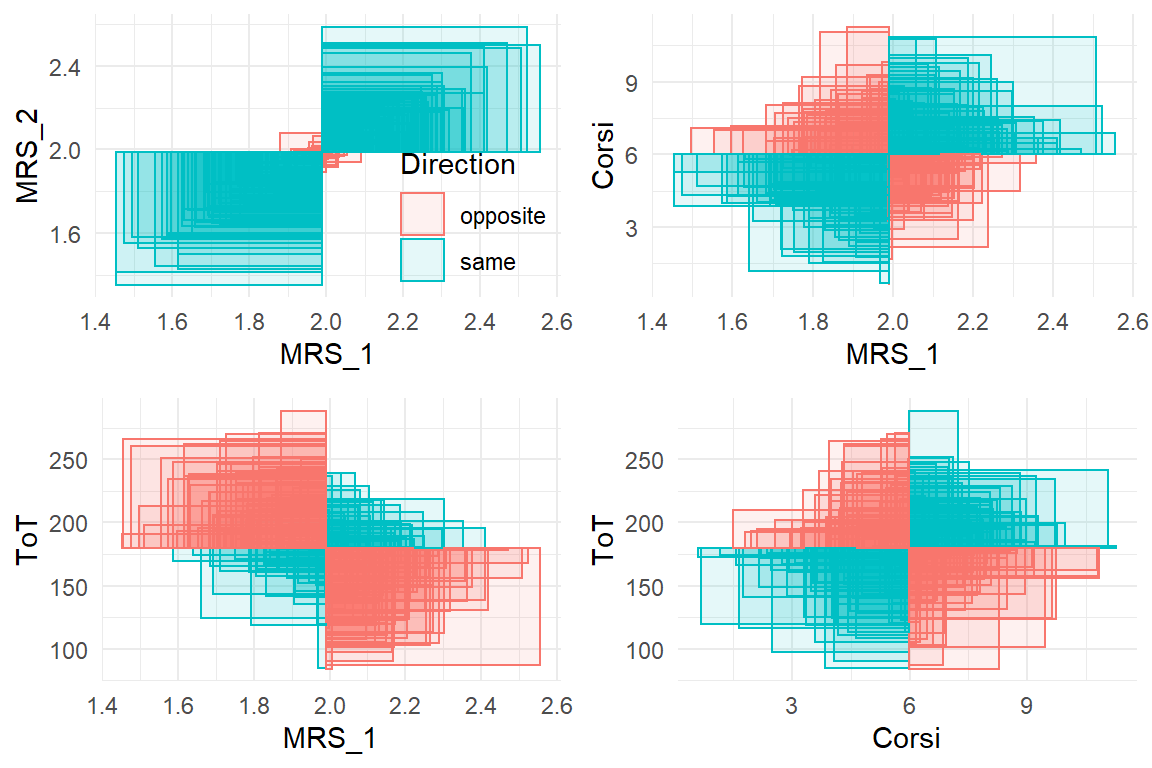

Figure 3.12 is an attempt at a geometric illustration of the multiplication as the area of rectangles. Rectangles with equal directions (blue) are in the upper-right and lower-left quadrant. They overwhelm the opposite direction rectangles (red), which speaks for a strong positive association. The associations between MRT_1 and Corsi, as well as between Corsi and ToT seem to have a slight overhead in same direction, so the covariance is positive, but less strong. A clear negative association exists between MRS_1 and Corsi. It seems these two tests have some common ground.

Figure 3.12: Illustration of covariance. Every rectangle represents a product of two measures. Same-direction rectangles have a positive area, opposite-direction rectangles a negative. The sum of all rectangles is the covariance.

If we compare the formulas of covariance and variance, it is apparent that variance is just covariance of a variable with itself (\((x_i - E(X))^2 = (x_i - E(X))(x_i - E(X))\):

That gives rise to a compact form to show all covariances and variances between a bunch of variables at once. Table 3.23 is a variance-covariance matrix produced by the command cov. It shows the variance of every variable in the diagonal and the mutual covariances in the off-diagonal cells.

cov(D_tests) | Obs | MRS_1 | MRS_2 | ToT | Corsi | |

|---|---|---|---|---|---|

| Obs | 7525.00 | 1.106 | 1.159 | -33.50 | 11.971 |

| MRS_1 | 1.11 | 0.043 | 0.041 | -4.25 | 0.106 |

| MRS_2 | 1.16 | 0.041 | 0.043 | -4.13 | 0.104 |

| ToT | -33.50 | -4.246 | -4.127 | 1515.49 | 7.615 |

| Corsi | 11.97 | 0.106 | 0.104 | 7.62 | 4.185 |

As intuitive the idea of covariance is, as unintelligible is the statistic itself for reporting results. Th problem is that covariance is not a pure measure of association, but is contaminated by the dispersion of \(X\) and \(Y\). For that reason, two covariances can only be compared if the variables have the same variance. As this is usually not the case, it is impossible to compare covariances. The Pearson correlation coefficient \(r\) solves the problem by rescaling covariances by the product of the two standard deviations:

\[ r_XY = \frac{\textrm{cov}_{XY}}{\textrm{sd}_X \textrm{sd}_Y} \]

my_cor <- function(x, y) {

cov(x, y) / (sd(x, na.rm = T) * sd(y, na.rm = T))

}

my_cor(D_tests$MRS_1, D_tests$MRS_2)## [1] 0.952Due to the standardization of dispersion, \(r\) will always be in the interval \([-1,1]\) and can be used to evaluate or compare strength of association, independent of scale of measurement.

That makes it the perfect choice when associations are being compared to each other or to an external standard. In the field of psychometrics, correlations are ubiquitously employed to represent reliability and validity of psychological tests (6.8). Test-retest stability is one form to measure reliability and it is just the correlation of the same test taken on different days. For example, we could ask whether mental rotation speed as measured by the mental rotation task (MRT) is stable over time, such that we can use it for long-term predictions, such as how likely someone will become a good surgeon. Validity of a test means that it represents what it was intended for, and that requires an external criterion that is known to be valid. For example, we could ask how well the ability of a person to become a minimally invasive surgeon depends on spatial cognitive abilities, like mental rotation speed. Validity could be assessed by taking performance scores from exercises in a surgery simulator and do the correlation with mental rotation speed. A correlation of \(r = .5\) would indicate that mental rotation speed as measured by the task has rather limited validity. Another form is called discriminant validity and is about how specific a measure is. Imagine another test as part of the surgery assessment suite. This test aims to measure another aspect of spatial cognition, namely the capacity of the visual-spatial working memory (e.g., the Corsi block tapping task). If both tests are as specific as they claim to be, we would expect a particularly low correlation.

And similar to covariances, correlations between a set of variables can be put into a correlation table, such as Table 3.24 . This time, the diagonal is the correlation of a variable with itself, which is perfect correlation and therefore equals 1.

cor(D_tests) | Obs | MRS_1 | MRS_2 | ToT | Corsi | |

|---|---|---|---|---|---|

| Obs | 1.000 | 0.062 | 0.065 | -0.010 | 0.067 |

| MRS_1 | 0.062 | 1.000 | 0.952 | -0.526 | 0.250 |

| MRS_2 | 0.065 | 0.952 | 1.000 | -0.513 | 0.245 |

| ToT | -0.010 | -0.526 | -0.513 | 1.000 | 0.096 |

| Corsi | 0.067 | 0.250 | 0.245 | 0.096 | 1.000 |

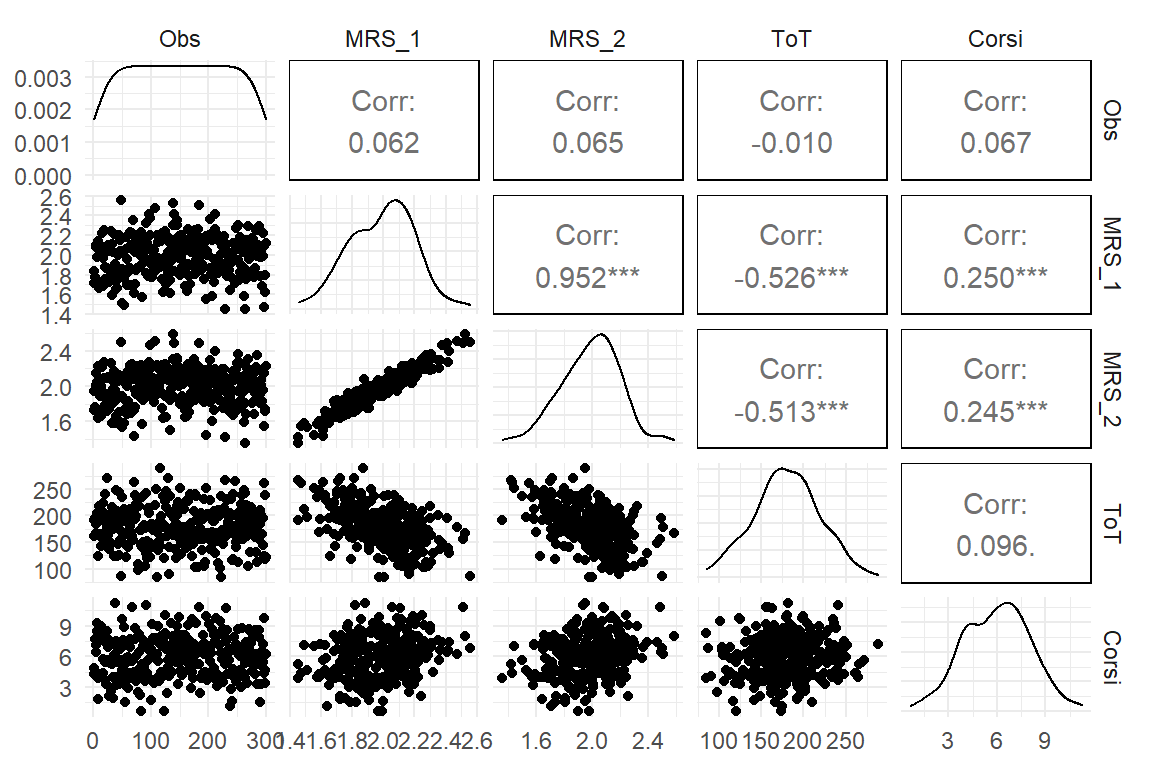

Another way to illustrate a bunch of correlations is shown in Figure 3.13. It was produced by the ggpairs command from the GGally package. For entirely continuous measures it shows:

- the association between a pair of variables as a scatter plot and a the correlation coefficient

- the observed distribution of every measure as a density plot

(In earlier versions of the command, correlations were given without p-values. In my opinion, forcing p-values on the user was not a good choice.)

D_tests %>%

GGally::ggpairs(upper = )

Figure 3.13: A pairs plot showing raw data (left triangle), correlations (right) and individual distribution (diagonal)

Correlations give psychometricians a comparable standard for the quality of measures, irrespective on what scale they are. In exploratory analysis, one often seeks to get a broad overview of how a bunch of variables is associated. Creating a correlation table of all variables is no hassle and allows to get a broad picture of the situation.

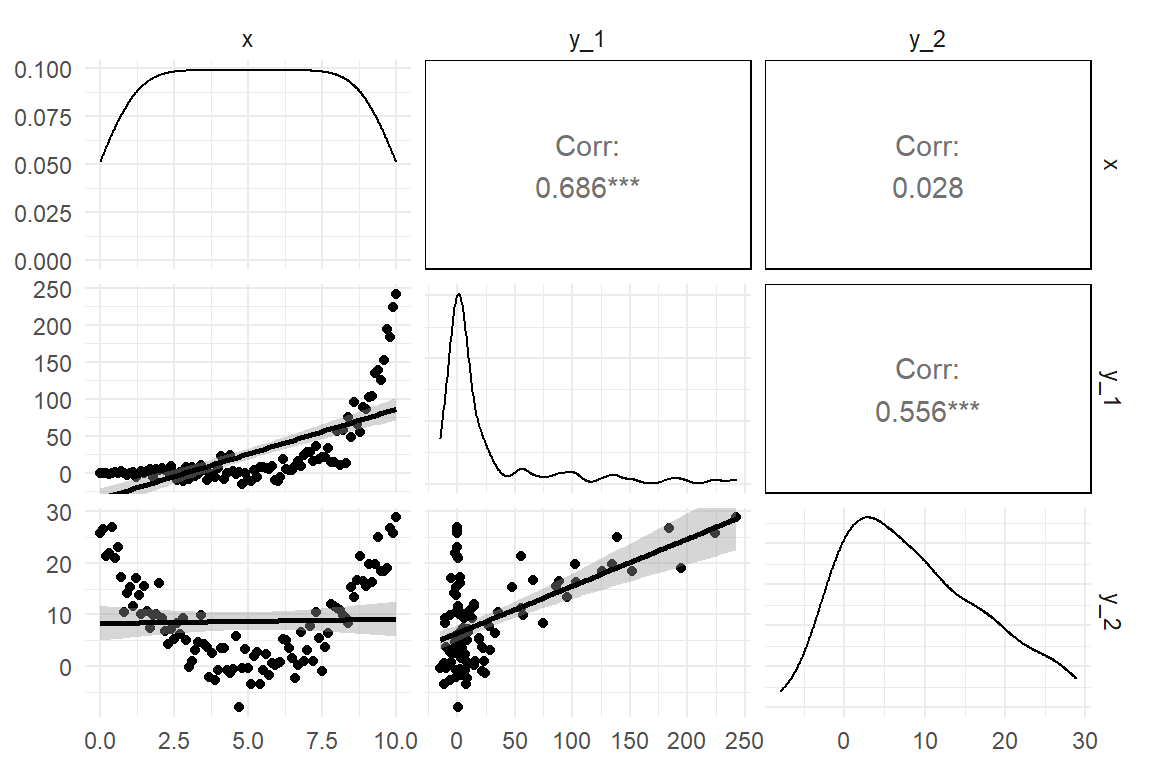

While correlations are ubiquitous in data analysis, they do have limitations: First, a correlation only uncovers linear trends, whereas the association between two variables can take any conceivable form. The validity of correlations depends on how salient the feature of linear trend is. Figure 3.14 shows a few types of associations where correlations are not adequate. In the example, \(Y_2\) is associated with \(X\) in a parabolic form, which results in zero correlation. Also, the curvature of an exponentially rising association (\(Y_1\)) is captured insufficiently. For that reason, I recommend that correlations are always cross-checked by a scatterplot. Another situation where covariances and correlations fail is when there simply is no variance. It is almost trivial, but for observing how a variable \(Y\) changes when \(X\) moves is that both variables actually vary. There simply is no co-variance without variance.

tibble(

x = (0:100) / 10,

y_1 = rnorm(101, exp(x) / 100, x * 2),

y_2 = rnorm(101, (x - 5)^2, 3)

) %>%

ggpairs(lower = list(continuous = "smooth"))

Figure 3.14: Some shapes of associations where correlation is not adequate

As we have seen, for every combination of two categorical and metric variables, we can produce summary statistics for the association, as well as graphs.

While it could be tempting to primarily use summary statistics and rather omit statistical graphs, the last example makes clear that some statistics like correlation are making assumptions on the shape the association. The different graphs we have seen are much less presupposing and can therefore be used to check the assumptions of statistics and models.

3.4 Bayesian probability theory

Mathematics is emptiness. In its purest form, it does not require or have any link to the real world. That makes math so difficult to comprehend, and beautifully strange at the same time. Sometimes a mathematical theory describes real world phenomena, but we have no intuition about it. A classic example is Einstein’s General Relativity Theory, which assumes a curved space, rather than the straight space our senses are tuned to. Our minds are Newtonian and the closest to intuitive understanding we can get is the imagination of the universe as a four-dimensional mollusk, thanks to Einstein.

Math can also be easy, even appear trivial, if mathematical expressions directly translate into familiar ideas and sensations. I recall how my primary school teacher introduced the sum of two numbers as removing elements from one stack and place it on second (with an obvious stop rule). Later, as a student, I was taught how the sum of two numbers is defined within the Peano axiomatic theory of Natural Numbers. As it turned out, I knew this already, because they just formalized the procedure I was taught as a kid. The formal proof for \(1 + 1 = 2\) is using just the same elements as me shifting blocks between towers.

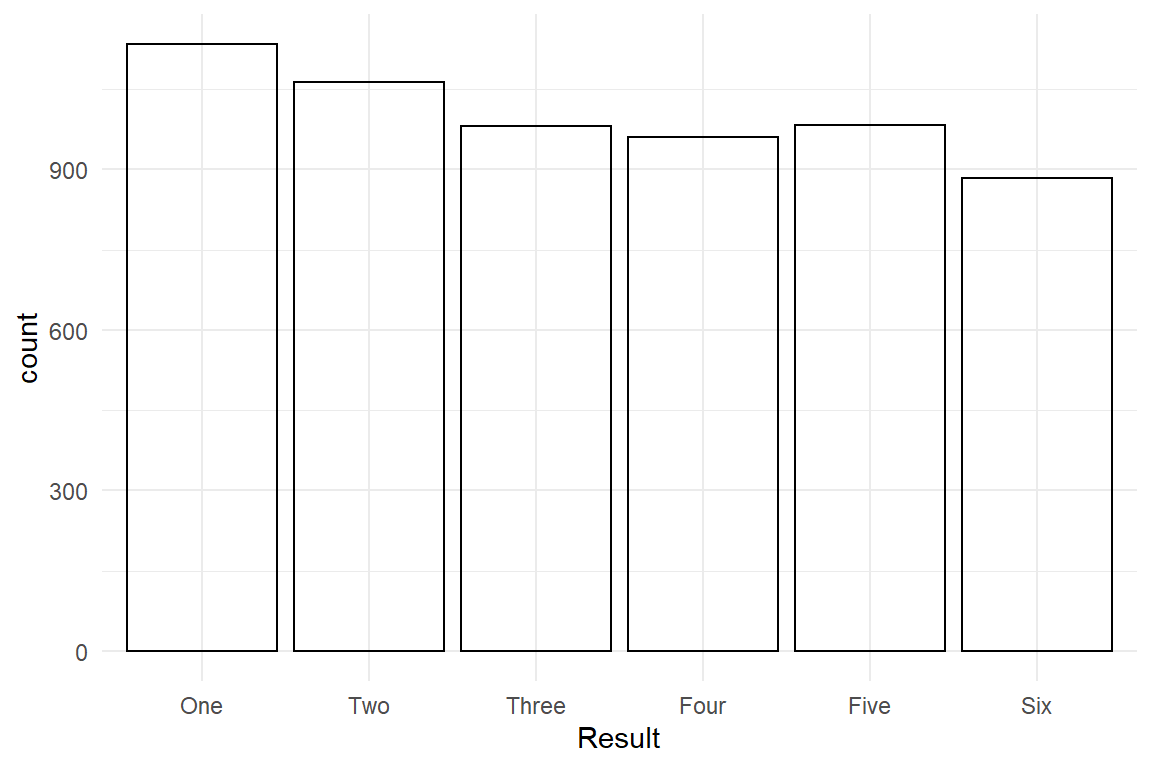

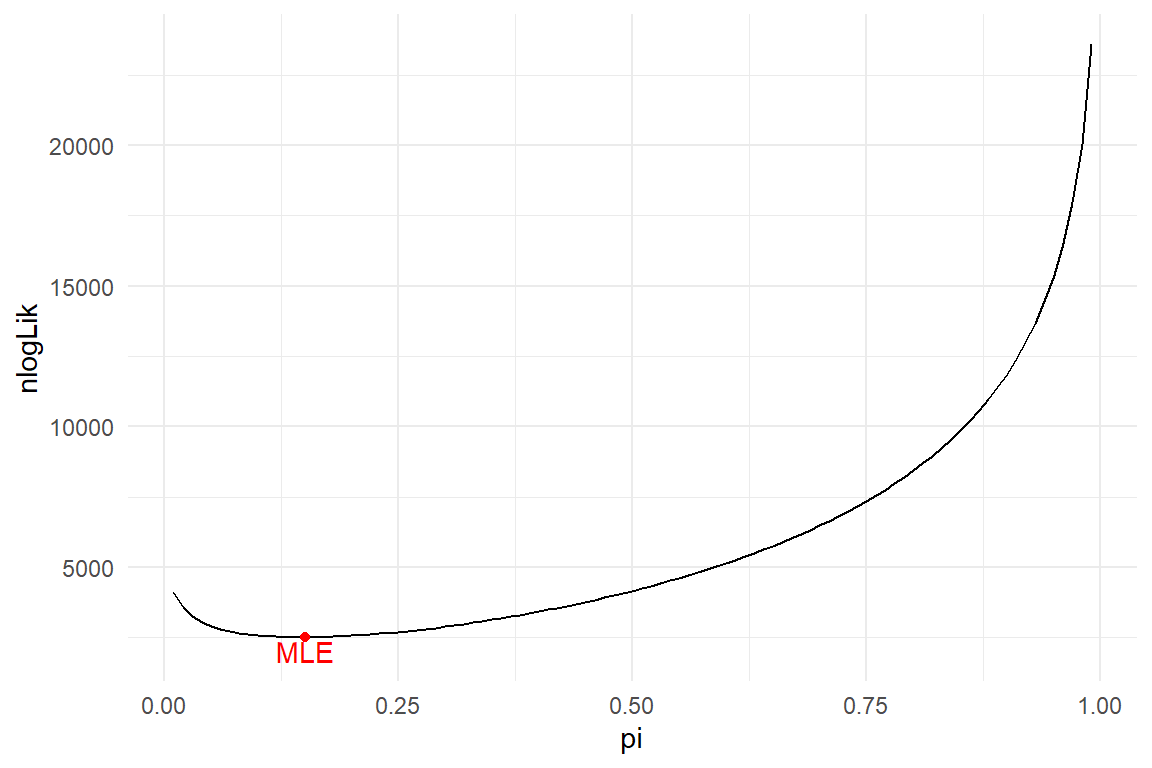

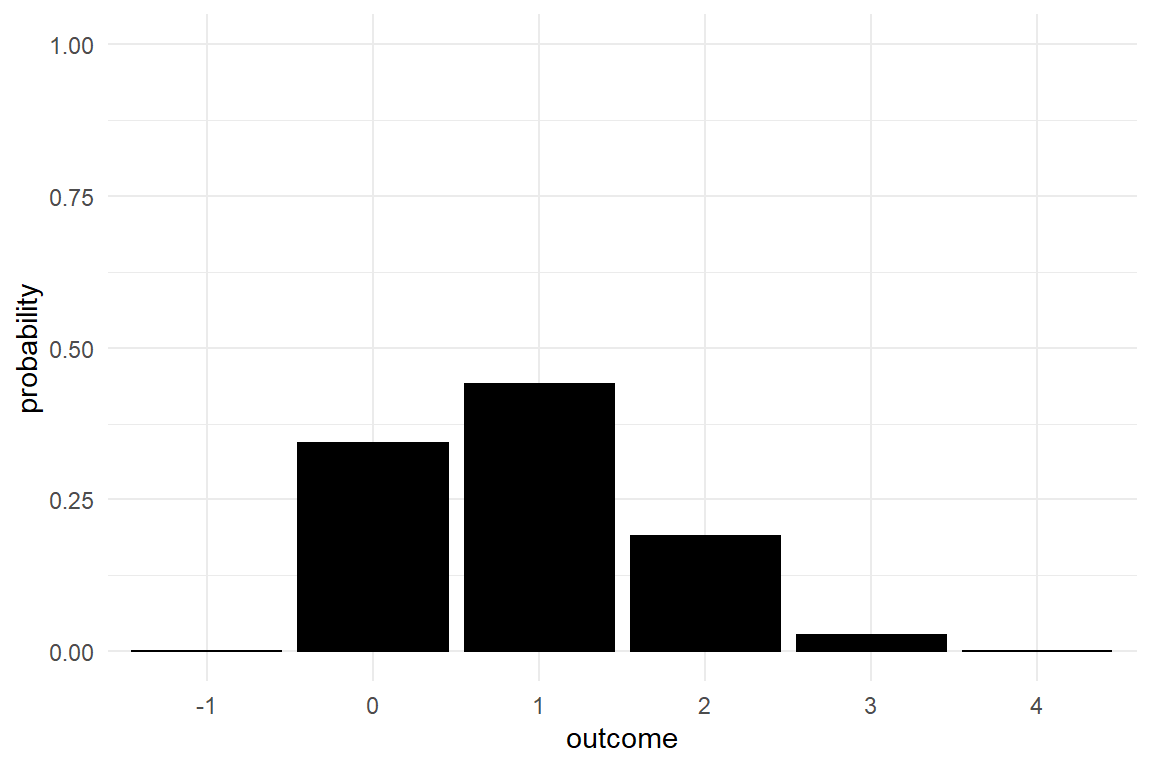

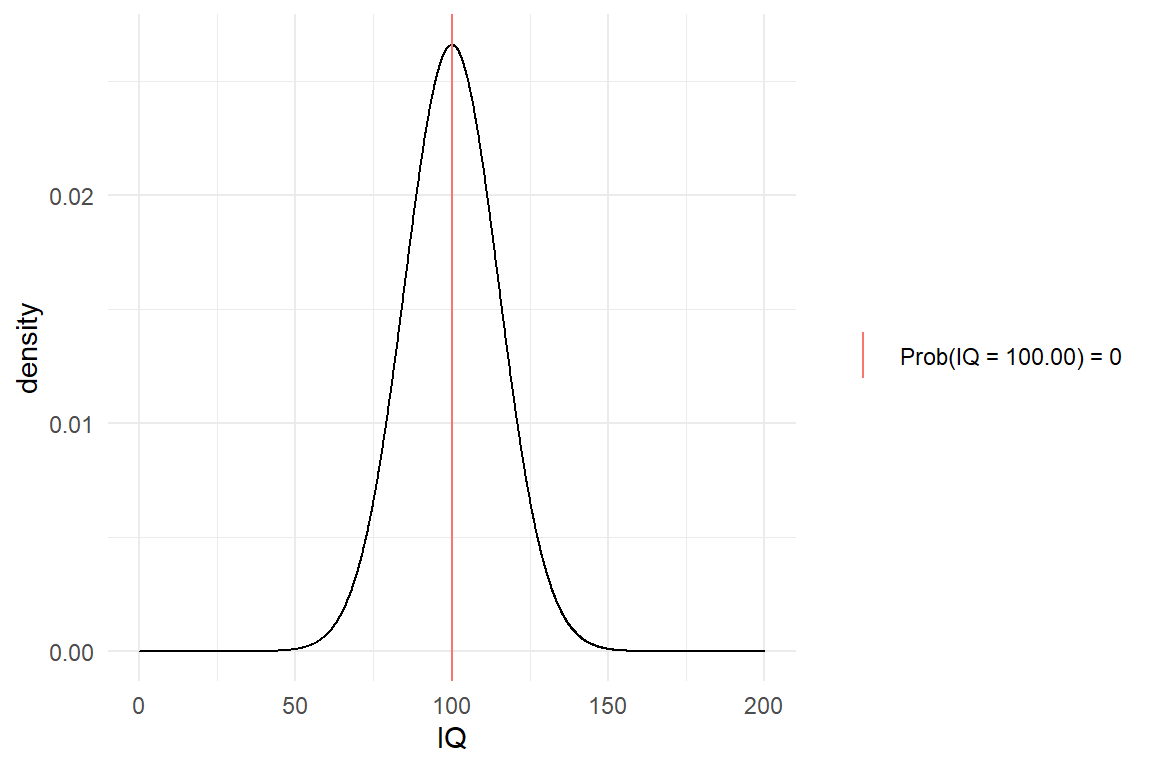

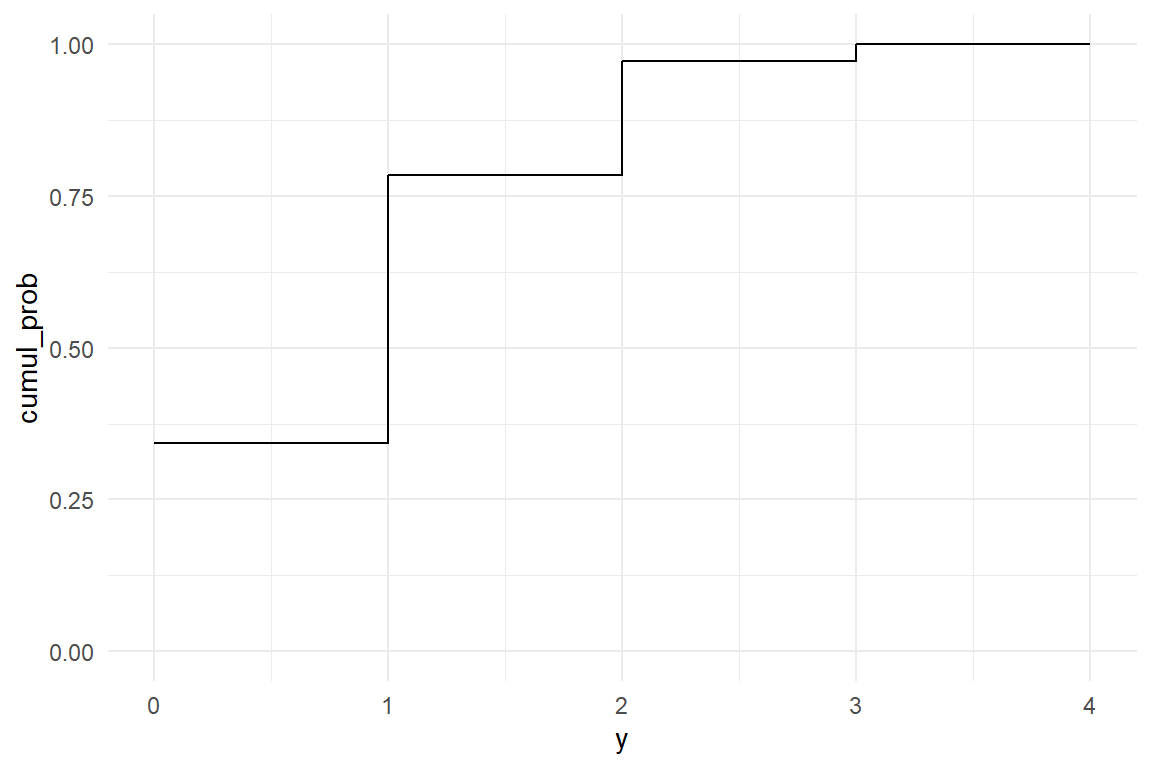

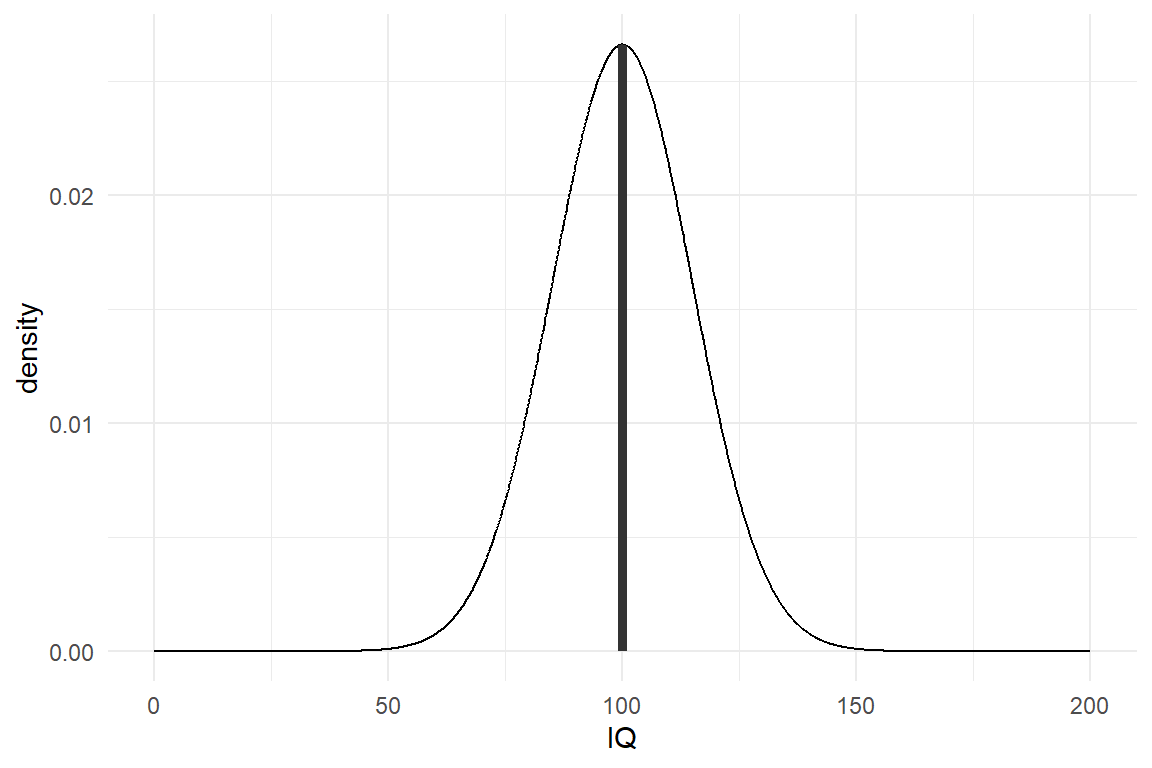

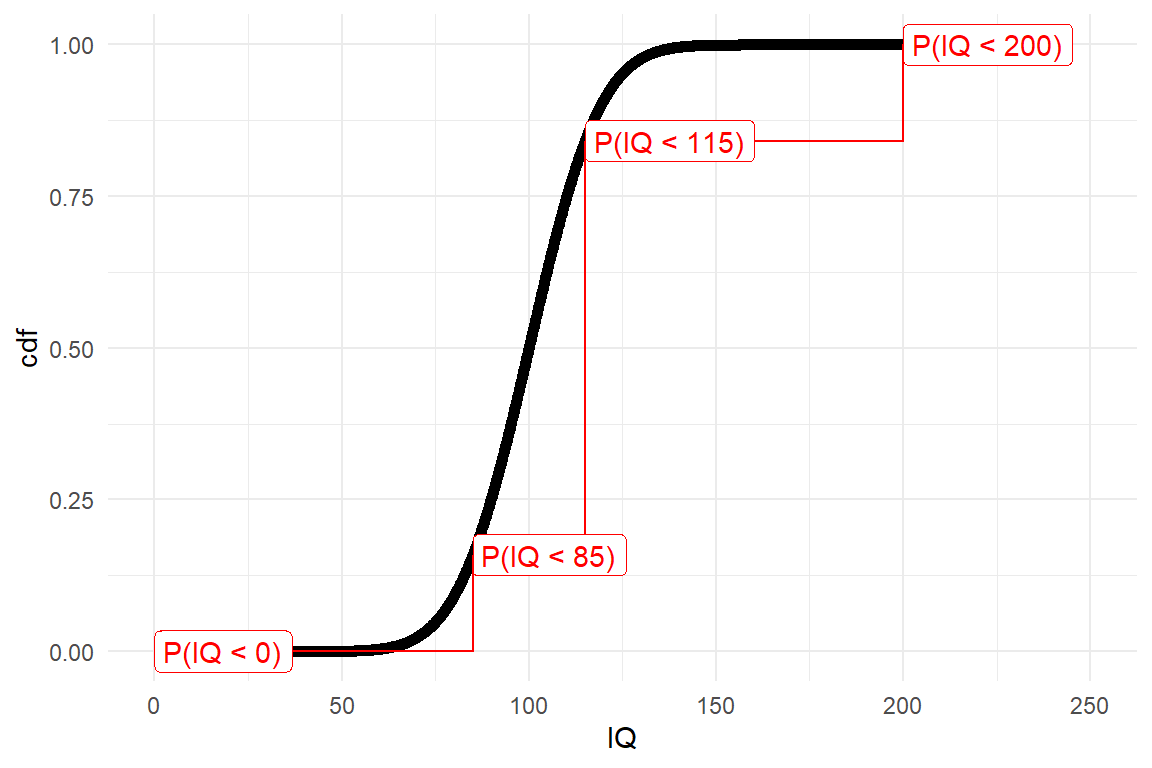

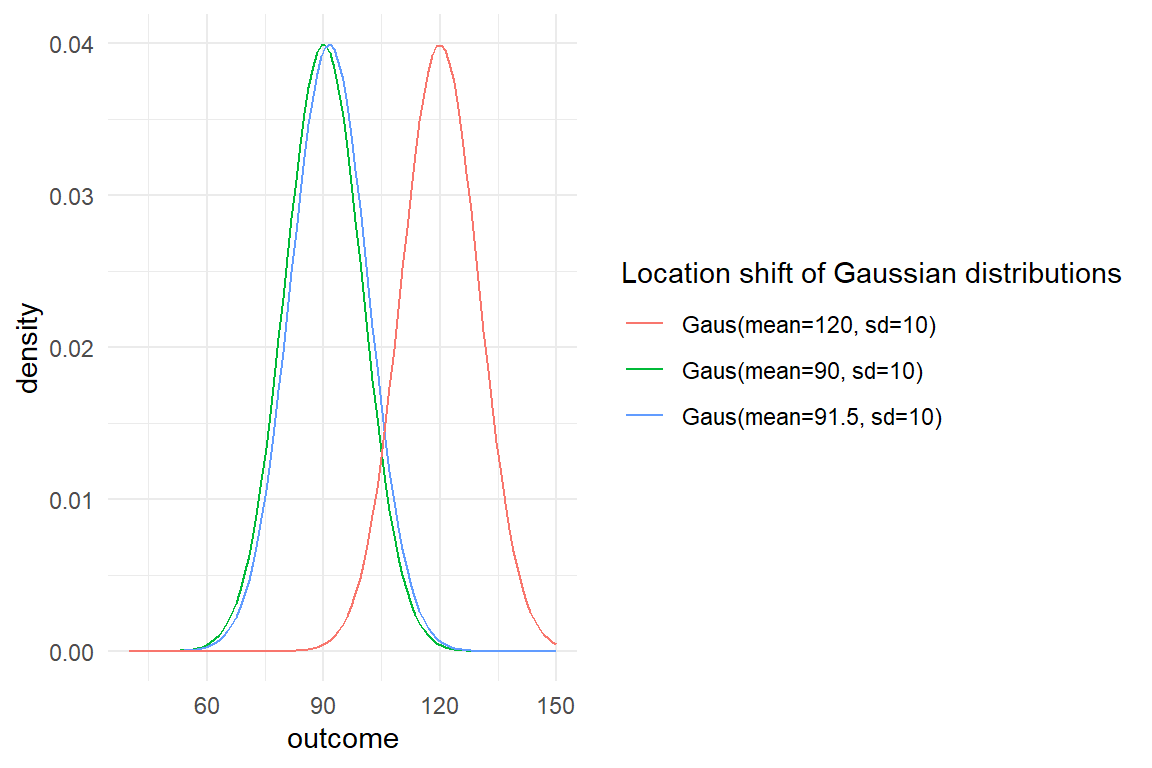

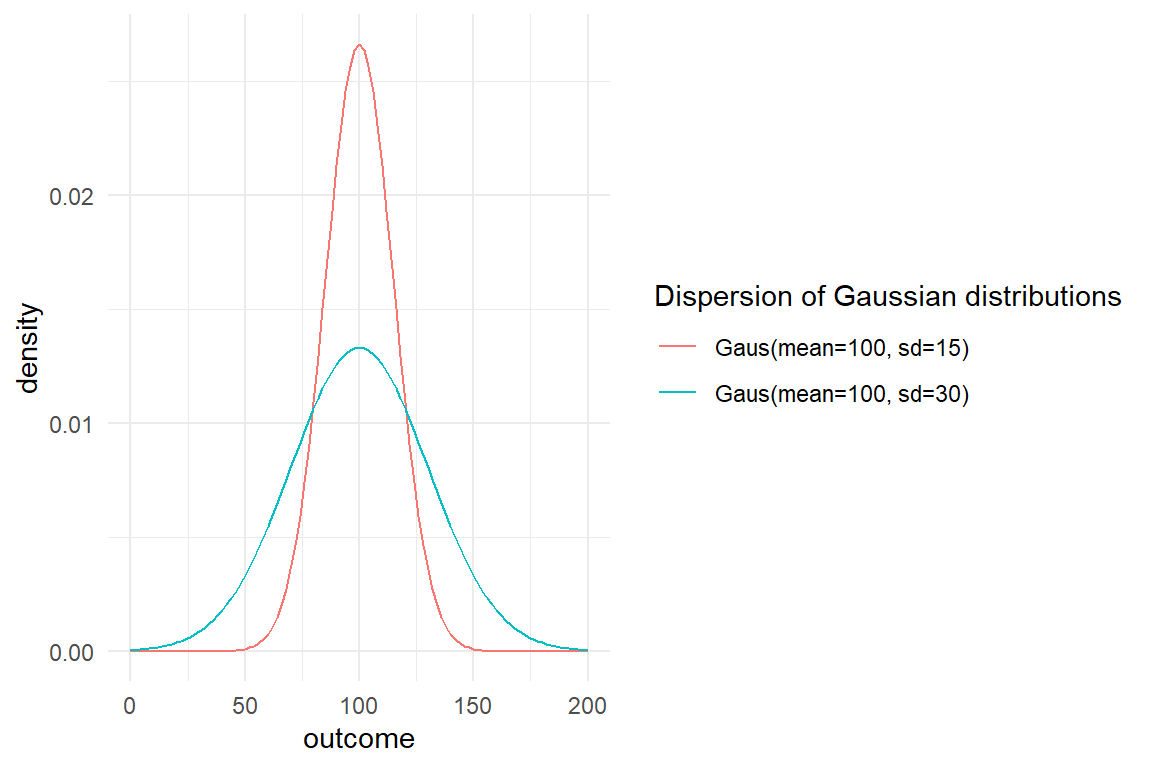

In this section I will introduce Probability theory, which is largely based on another mathematical theory that many people find intuitive, Set theory. The formal theory of probability, the Kolmogorov axioms may be somewhat disappointing from an ontological perspective, as it just defines rules for when a set of numbers can be regarded probabilities. But calculating actual probabilities is rather easy and a few R commands will suffice to start playing with set theory and probability. The most tangible interpretation of probabilities is that the probability of an event to happen, say getting a Six when rolling a dice, coincides with the relative frequency of Six in a (very long) sequence of throws. This is called the frequentist interpretation of probability and this is how probability will be introduced in the following. While thinking in terms of relative frequency in long running sequences is rather intuitive, it has limitations. Not all events we want to assign a probability can readily be imagined as a long running sequence, for example:

- the probability that your house burns down (you only have this one)

- the probability that a space ship will safely reach Mars (there’s only this one attempt)

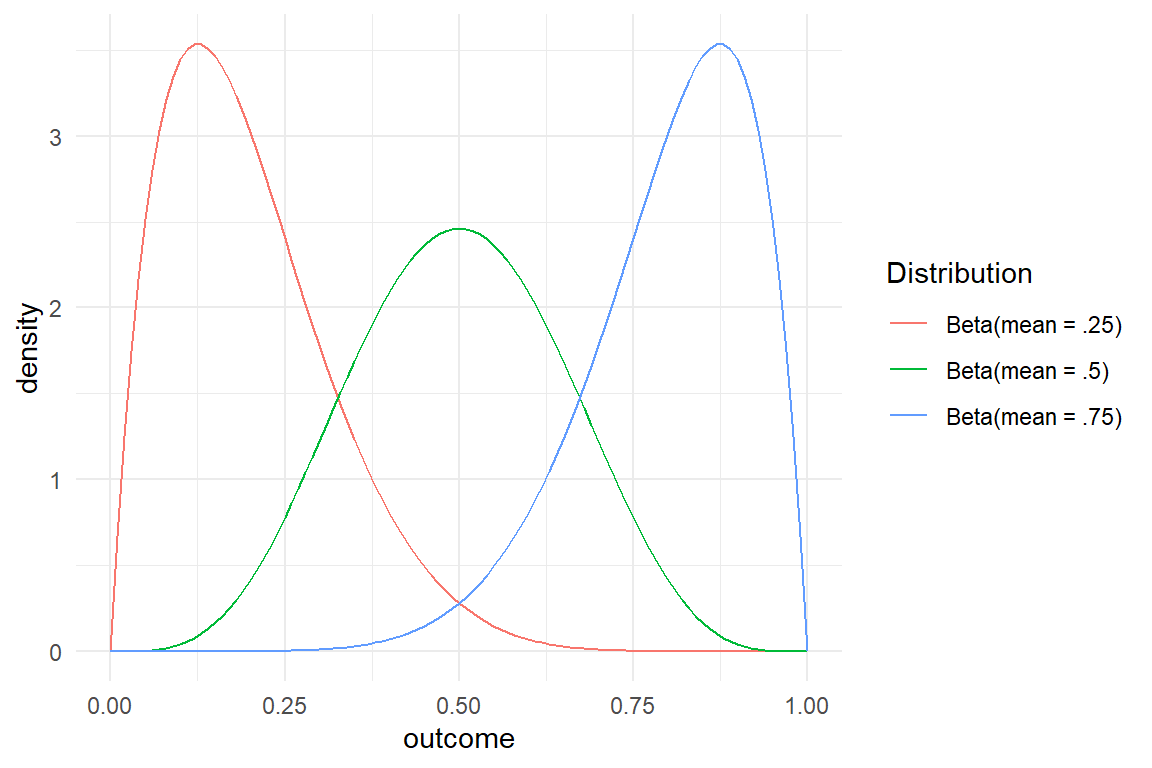

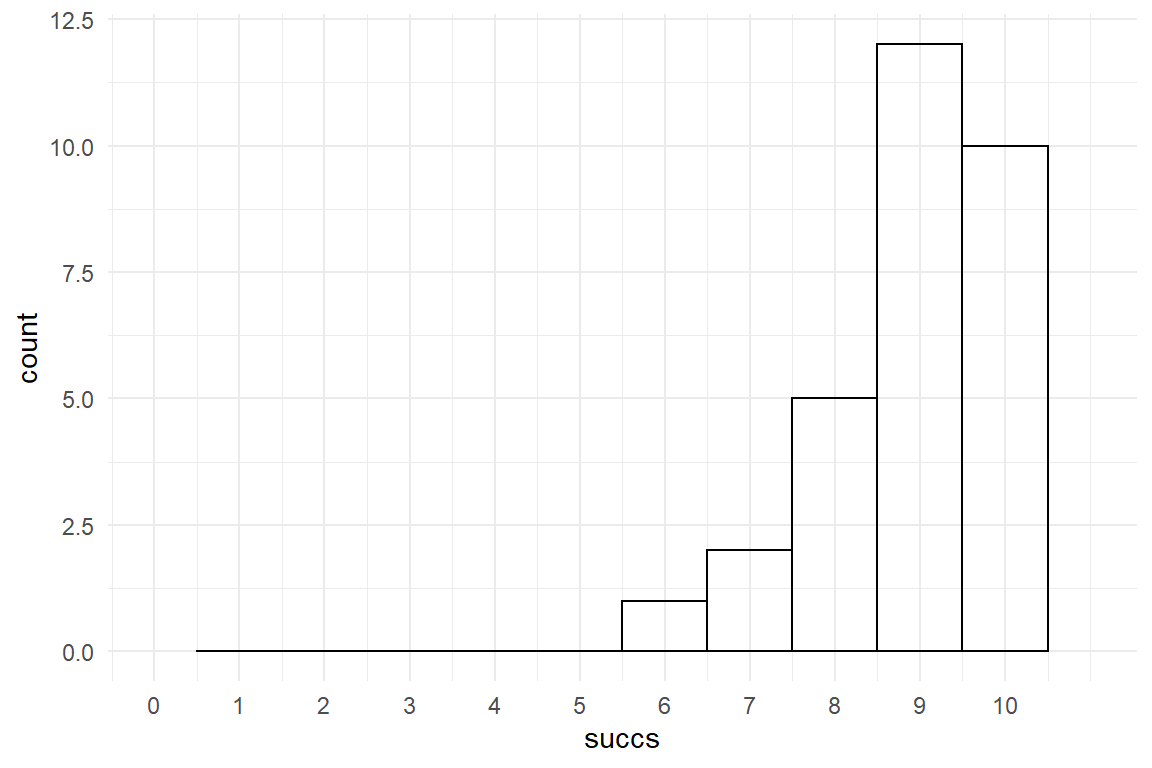

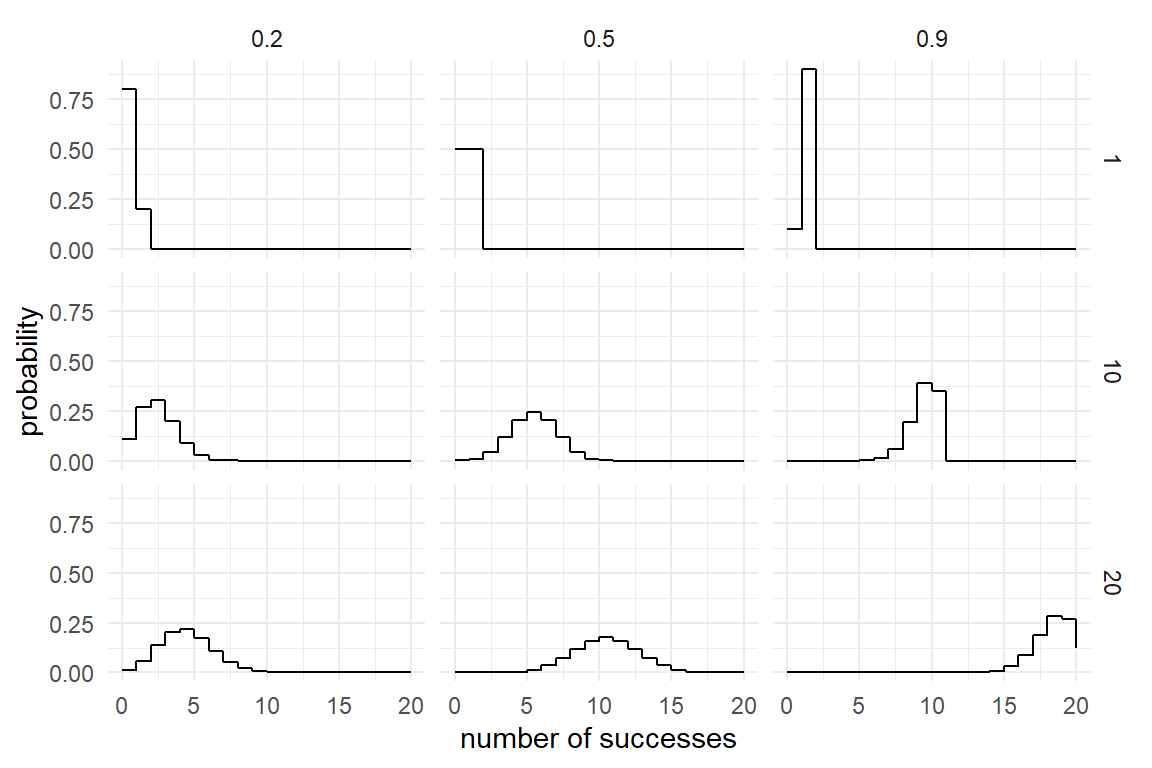

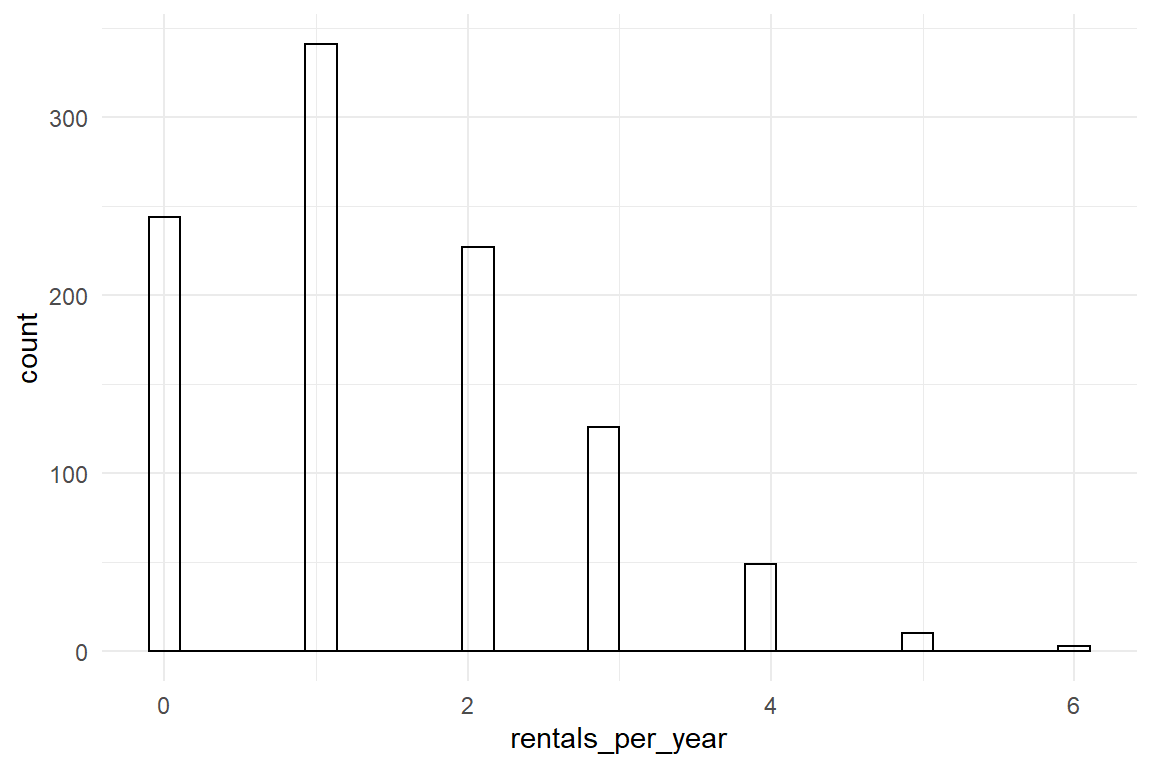

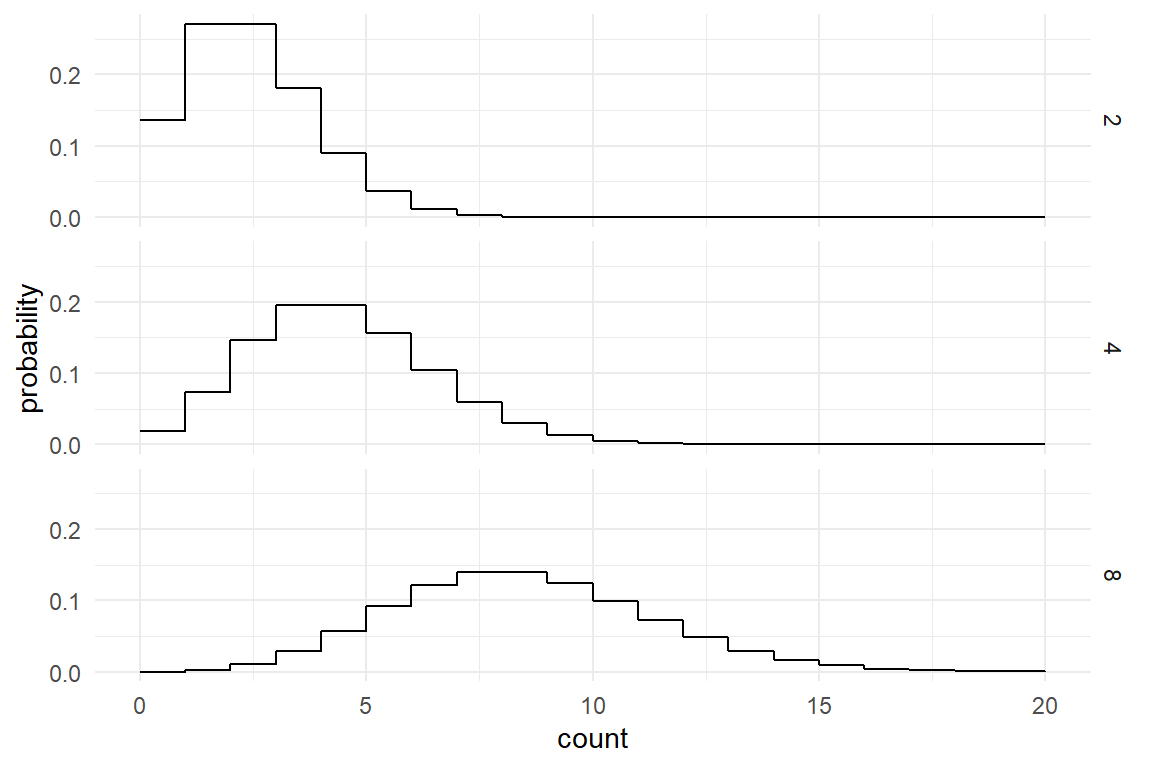

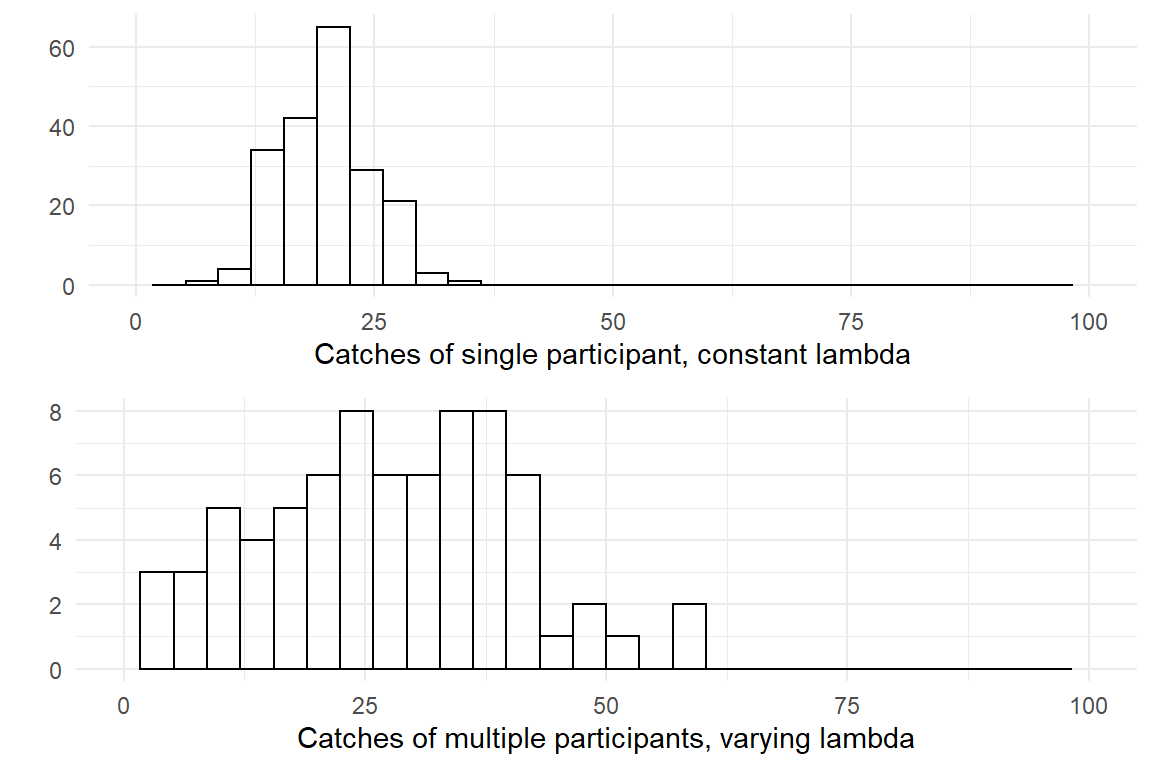

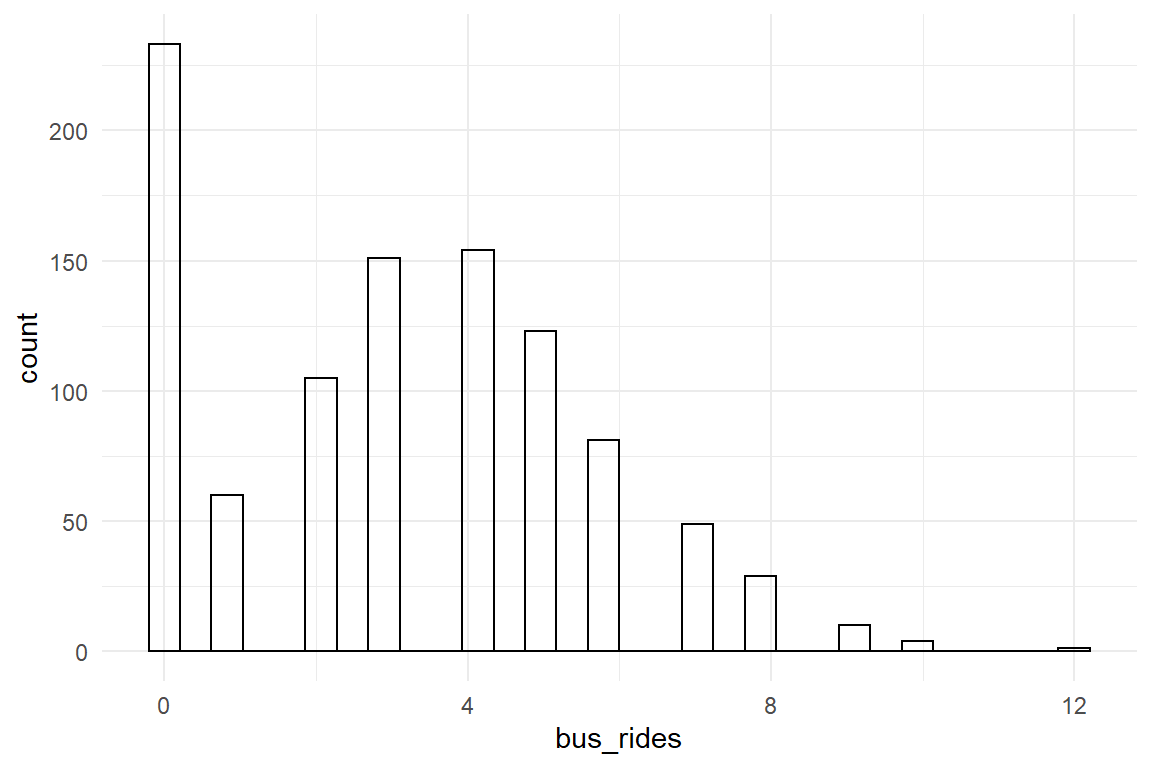

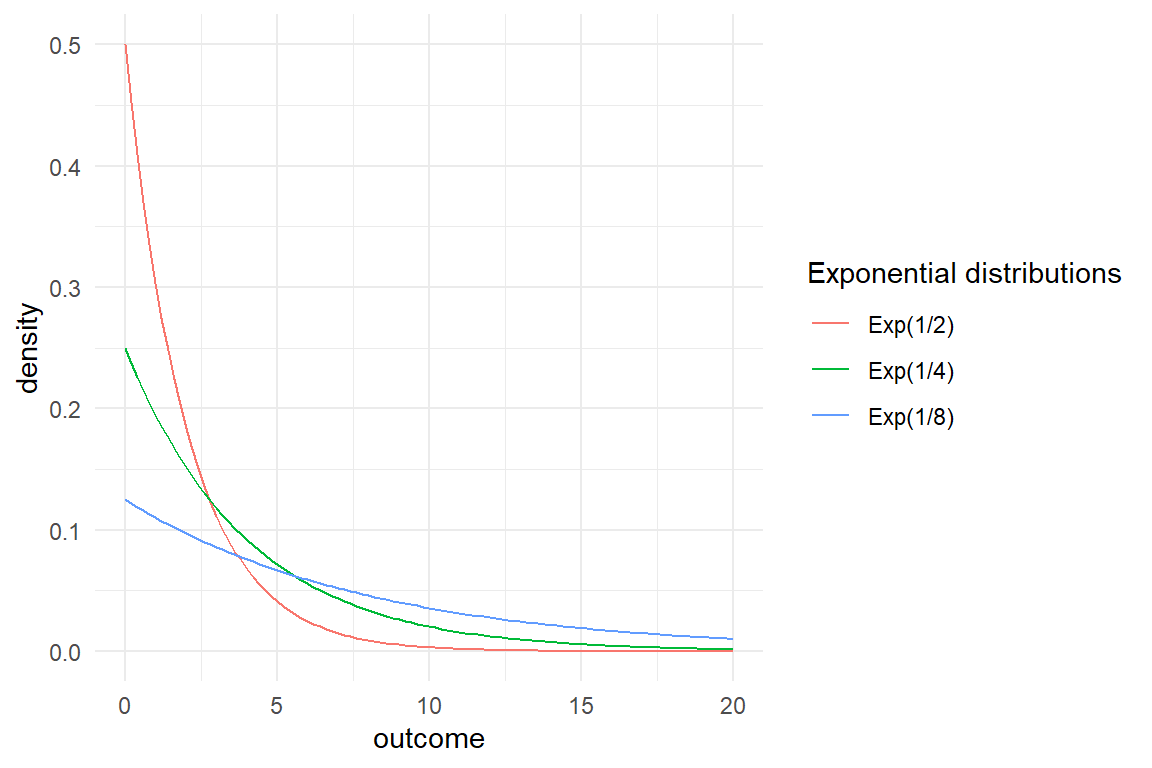

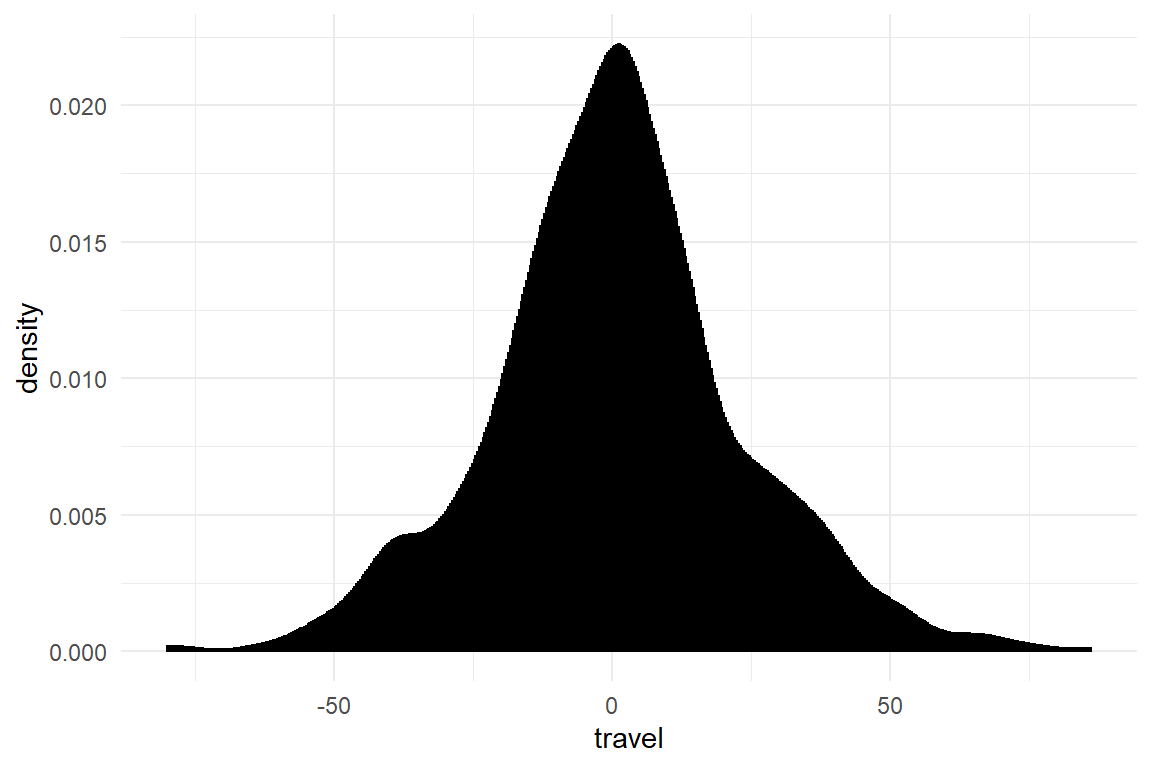

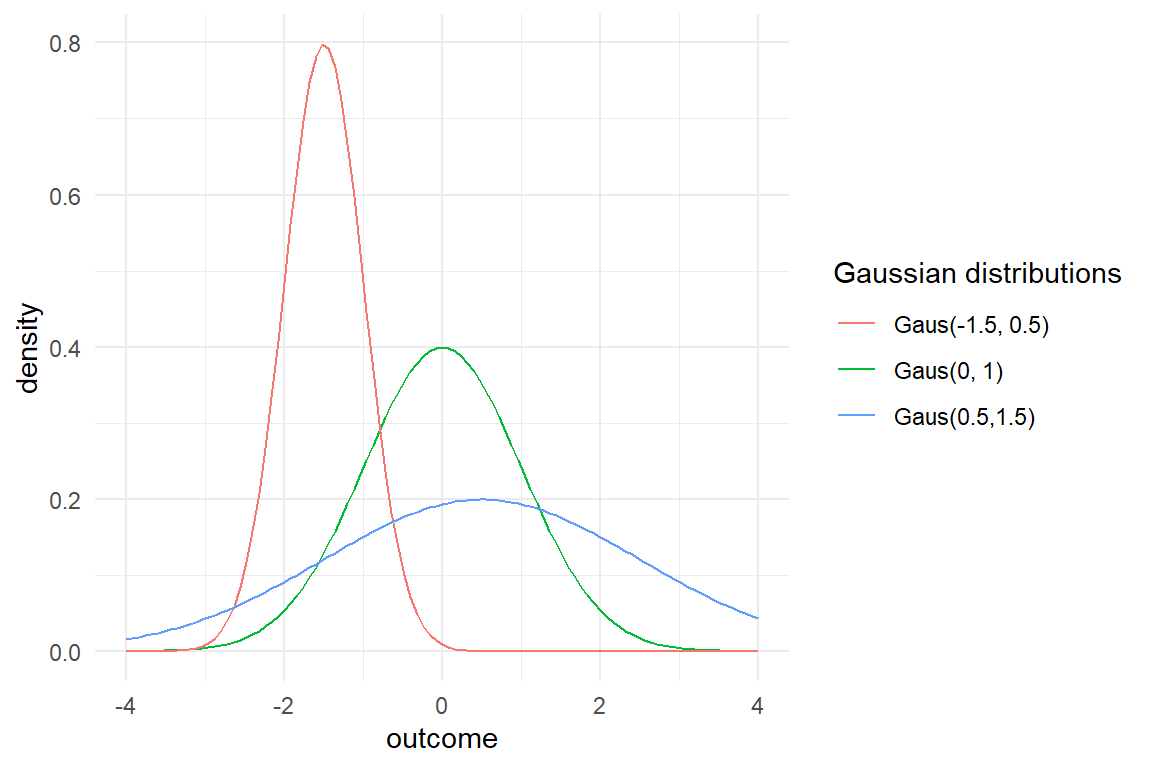

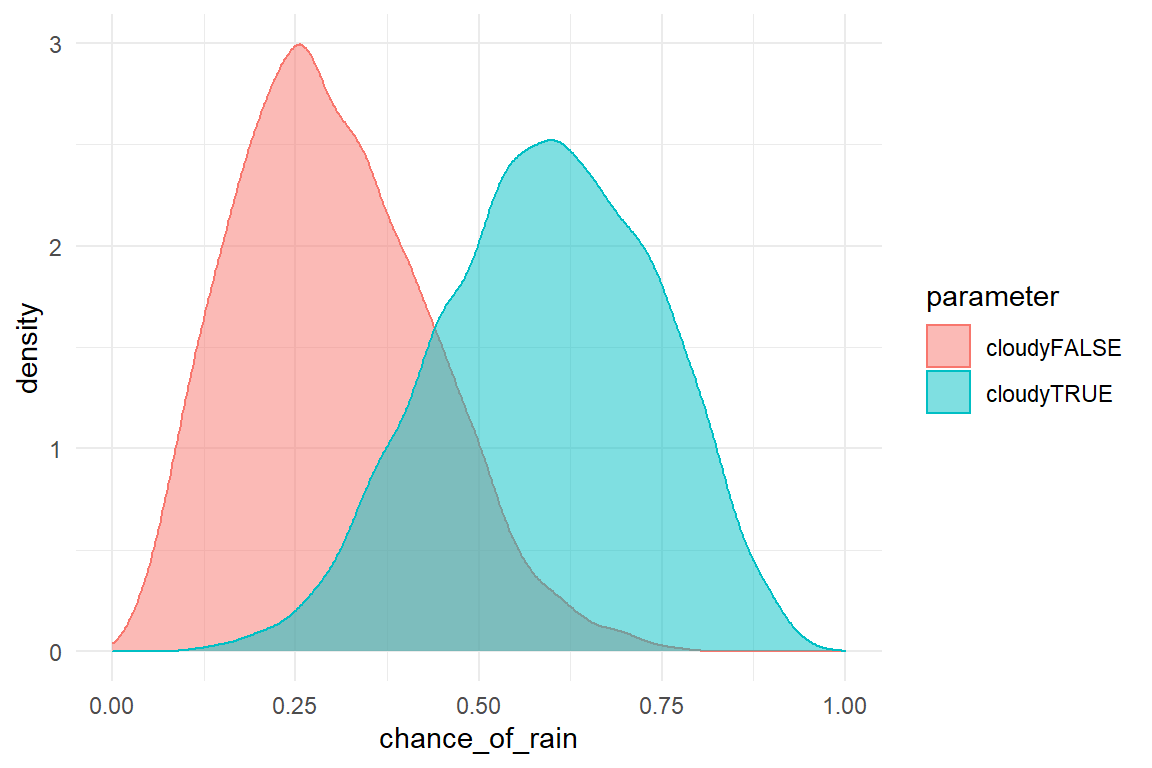

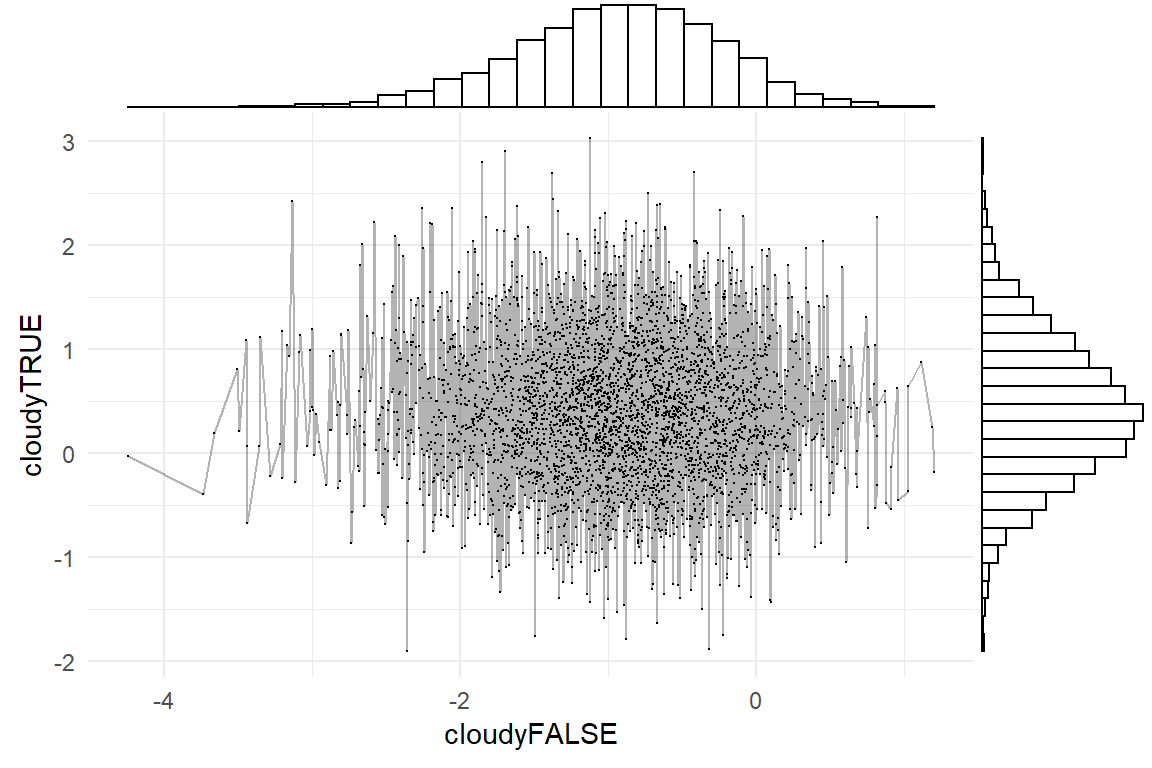

- the probability that a theory is more true than another (there’s only this pair)